Know The Scientist

Shuji Nakamura – the ‘Edison’ of the blue LED revolution

Shuji Nakamura’s journey to inventing the blue LED is an example of a ‘high risk, but high reward’ strategy for scientific innovation. It also offers a glimpse of how scientific research unfolds in a corporate environment.

If there is one inspiring story about perseverance, it is Thomas Edison’s much-retold development of the commercially viable incandescent light bulb in 1879.

In response to questions regarding ‘missteps’ with developing the bulb, Edison famously said, “I have not failed 10,000 times—I’ve successfully found 10,000 ways that will not work.”

But scientific research comes with its own complexity and challenges. Edison, arguably, had resources at his disposal. What does it take to achieve a breakthrough as a scientist in a corporate environment, when all the odds are stacked against you?

Today’s EP Know the Scientist profiles a scientist who strove in an environment of desperation and prevailed despite all odds. A century after Edison, and rivaling his stature, came the Japanese engineer and physicist Shuji Nakamura.

Nakamura invented the blue light-emitting diode (LED) in 1993, while he was the chief engineer for Nichia Corporation in Japan. The invention of the blue LED set off the second light revolution in the mid-1990s.

Shuji Nakamura holding a blue-LED. Credit: Ladislav Markus / Wikimedia

The blue LED had long eluded the electrical industry, which needed it to complete the coveted trio of red, green and blue LEDs. By superimposing and tuning the three colors, it becomes easy to cover the entire visible light spectrum.

Smartphone screens, digital signage, traffic lights and spotlights have all come to depend on LEDs.

LED bulbs paved the way for cheaper lighting that was also energy efficient. They can emit visible light of distinct colors while consuming, on average, at least 75% less electricity than incandescent light bulbs. Incandescent bulbs waste the bulk of their energy as heat, with only about 2% emitted as polychromatic light.

As the world pushes for cleaner energy resources, it helps that LEDs set off their revolution around the time a consensus was forming that we need to be more energy efficient.

The ‘high risk, but high reward’ strategy

Nichia Corporation had been making losses for years in the LED market, despite selling red and infrared LEDs. That’s when Shuji Nakamura, the chief designer developing these LEDs, came under pressure to come up with a new product of the company’s own. Nakamura pitched the idea of researching and inventing a blue LED, a last-minute gamble to save Nichia’s future. It sounded ludicrous that Nakamura aimed to research blue LEDs when semiconductor physicists of far higher repute were working on the problem in well-equipped laboratories.

Physicists had narrowed the search down to two semiconductor materials whose band gap energy was suited to emitting blue light upon stimulation: zinc selenide and gallium nitride. Growing a perfect gallium nitride lattice was deemed too difficult, so physicists pushed their resources towards zinc selenide. Playing a high-risk, high-reward game, Nakamura decided to dedicate his time to gallium nitride instead.
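The link between band gap and emitted color follows from the photon relation λ = hc/E. The sketch below is only an illustration and is not from Nakamura's work: the band gap values (about 2.7 eV for zinc selenide and about 3.4 eV for gallium nitride) are approximate room-temperature textbook figures assumed here, and the function name is hypothetical.

```python
# Illustrative sketch: convert a semiconductor band gap to the wavelength
# of the photon it would emit, using lambda = h * c / E.
# The band gap values below are approximate room-temperature textbook
# figures and are assumptions, not numbers taken from the article.

PLANCK_EV_S = 4.135667696e-15   # Planck constant in eV*s
LIGHT_SPEED_M_S = 299_792_458   # speed of light in m/s


def band_gap_to_wavelength_nm(band_gap_ev: float) -> float:
    """Return the emission wavelength in nanometres for a band gap in eV."""
    if band_gap_ev <= 0:
        raise ValueError("band gap must be positive")
    wavelength_m = PLANCK_EV_S * LIGHT_SPEED_M_S / band_gap_ev
    return wavelength_m * 1e9


APPROX_BAND_GAPS_EV = {
    "zinc selenide": 2.7,
    "gallium nitride": 3.4,
}

for material, gap in APPROX_BAND_GAPS_EV.items():
    print(f"{material}: {band_gap_to_wavelength_nm(gap):.0f} nm")
```

With these assumed values, zinc selenide lands in the blue (roughly 460 nm), while pure gallium nitride lands in the near-ultraviolet (roughly 365 nm). Practical blue LEDs alloy gallium nitride with indium to narrow the gap and shift the emission into the blue.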

Gallium nitride crystal. Credit: Opto-p / Wikimedia

The reason was partly that he had a contingency plan in place. In Japan, one could be awarded a PhD by getting five papers published. Nakamura was banking on continuing his research until he could get those five papers published and be assured of a PhD. That doesn’t mean he was desperate enough to lose sight of his original goal of researching gallium nitride. By pursuing this semiconductor, he could avoid competition from physicists everywhere else, and he had a higher chance of reporting something novel enough to merit a paper.

Building academic networks

There were other challenges too, including maintaining a healthy research environment. That wasn’t assured, despite backing from Nobuo Ogawa, then the boss at Nichia. His son, Eiji Ogawa, who succeeded him, had friction with Nakamura. Despite Eiji’s repeated attempts to get Nakamura to switch to zinc selenide, Nakamura remained as stubborn as ever.

As much as Nakamura’s story sounds like that of a prodigious, lone engineer who made a discovery that escaped many, that isn’t quite true. Nakamura did occasionally collaborate with other researchers for leads while working on gallium nitride.

Japan, for one, has some of the brightest minds in the world at the forefront of scientific research. So does the US.

Representative image of a chemical vapor deposition (CVD) reaction chamber. Credit: NASA / Wikimedia

Nakamura kept abreast of developments on gallium nitride, traveling to Nagoya University, where Hiroshi Amano and Isamu Akasaki had found a way to create the perfect lattice.

In the US, Nakamura learnt the art of designing a ‘metal-organic chemical vapor deposition (MOCVD) reactor’. MOCVD is a technique used across materials physics to grow a thin layer of a material over a substrate.

Back in Japan, Nakamura modified his MOCVD reactor. By adding an extra nozzle, he created the ‘two-flow MOCVD’ technique that at last solved the blue-LED conundrum. And the rest is history.

Nobel Prize and his 70th birthday

Nakamura, along with Amano and Akasaki – his Nagoya University collaborators, shared the 2014 Nobel Prize in Physics, “for the invention of efficient blue light-emitting diodes which has enabled bright and energy-saving white light sources.”

Nichia’s fortunes grew like never before. From gross receipts of $200 million in 1993, the company reached $800 million by 2001, with 60% of that coming from sales of Nakamura’s blue-LED technology. Nichia has since supplied LEDs to major clients, including Apple and other electronics companies, and remains a market leader in Japan.

However, Nakamura received no favors from Nichia. The company barely increased his paycheck after an invention that set the precedent for lighting across the world. Nakamura decided to leave for the US, where he found much better opportunities and a better salary. But he left only after fulfilling his backup plan of publishing five papers, which earned him an engineering PhD from the University of Tokushima in 1995.

Nichia followed him even to the US.

A decade ago, Nichia filed a lawsuit against him, claiming intellectual property rights over blue LED technology in connection with a company Nakamura worked with. However, Nakamura prevailed and received $8 million in a settlement.

Nakamura, who’ll be a septuagenarian later this March, now holds a position as an engineering professor at the University of California, Santa Barbara, US. Even at 70, he has not retired.

An engineer by training who won a physics prize, his is a tale that is rarely repeated: a story of indomitable resilience, unwavering in the face of every challenge.

Know The Scientist

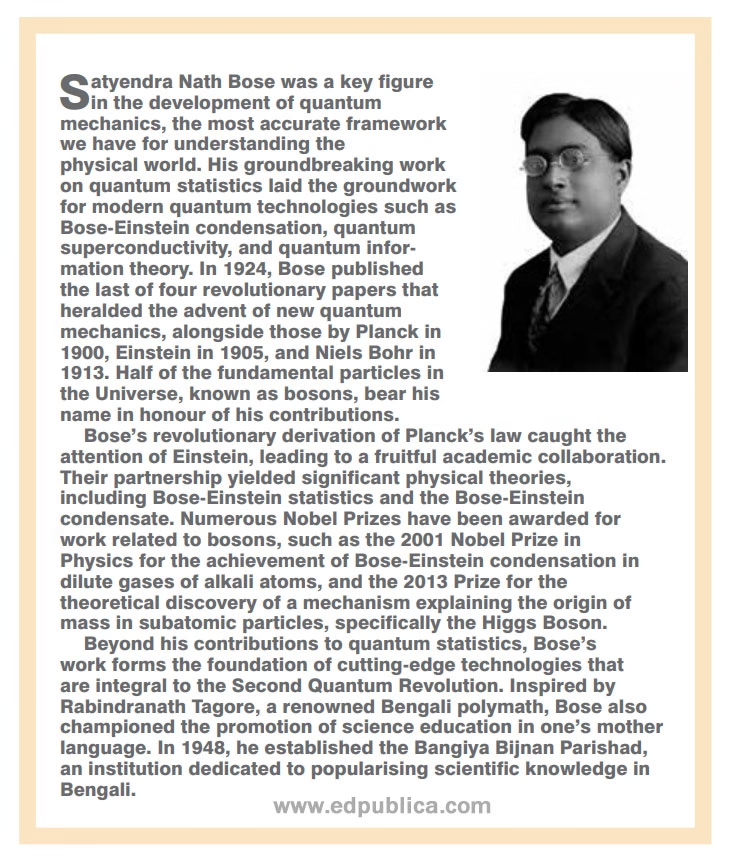

Remembering S.N. Bose, the underrated maestro in quantum physics

Rejected in Britain, celebrated by Einstein, here’s the story of S.N. Bose, the Indian physicist who formulated quantum statistics, now a bedrock theory in condensed matter physics.

It’s 1924, and Satyendra Nath Bose, better known as S.N. Bose, is a young physicist teaching in Dhaka, then part of British India. Gripped by an epiphany, he was desperate to publish his solution, which fixed a logical inconsistency in Planck’s radiation law. He had his eyes on the British Philosophical Magazine, since word could then spread to the leading physicists of the time, most if not all of them in Europe. But the paper was rejected without any explanation.

He wasn’t going to give up just yet. Unrelenting, he sent another sealed envelope to Europe with his draft, this time accompanied by a cover letter. One can imagine Bose, months later, breathing a sigh of relief when he finally got a positive response from none other than the great man of physics himself, Albert Einstein.

In some ways, Bose and Einstein were similar. Neither had a PhD when he wrote the treatise that brought him into the limelight. And just as Einstein introduced E = mc², derived from special relativity, with little fanfare, Bose at first could not secure a publisher for the groundbreaking work that invented quantum statistics. He produced a novel derivation of the Planck radiation law from the first principles of quantum theory.

This was a well-known problem that had plagued physicists since Max Planck, the father of quantum physics himself. Einstein had struggled with it time and again without ever resolving it. But Bose did, and rather nonchalantly, with a simple derivation from first principles grounded in quantum theory. For those who know some quantum theory, I’m referring to Bose’s profound recognition that the Maxwell-Boltzmann distribution, which holds true for ideal gases, fails for quantum particles. A technical treatment of the problem would reveal that photons, the particles of light, are indistinguishable from one another when they have the same energy and polarization, so swapping two of them does not produce a new state to count.

Fascinated and moved by what he read, Einstein was magnanimous enough to have Bose’s paper translated into German and published in the journal Zeitschrift für Physik in Germany the same year. It would be the beginning of a brief but productive professional collaboration between the two theoretical physicists, one that would open the doors to the quantum world much wider. Last July marked 100 years since Einstein submitted Bose’s paper, “Planck’s law and the quantum hypothesis”, on his behalf to Zeitschrift für Physik.
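The difference between the two ways of counting shows up even in a toy example. The sketch below is a minimal illustration, assuming a handful of particles shared among a few states; the function names are hypothetical. Classical (Maxwell-Boltzmann) counting treats particles as labeled, while Bose's counting treats them as indistinguishable.

```python
from itertools import product
from math import comb


def classical_microstates(particles: int, states: int) -> int:
    """Count arrangements when every particle carries its own label."""
    return states ** particles


def bose_microstates(particles: int, states: int) -> int:
    """Count arrangements when particles are indistinguishable.

    Only the occupation number of each state matters, which gives the
    'stars and bars' result C(particles + states - 1, particles).
    """
    return comb(particles + states - 1, particles)


def bose_microstates_brute_force(particles: int, states: int) -> int:
    """Cross-check: enumerate labeled arrangements, keep distinct occupations."""
    occupations = set()
    for assignment in product(range(states), repeat=particles):
        occupations.add(tuple(assignment.count(s) for s in range(states)))
    return len(occupations)


# Two photons shared between two states.
print(classical_microstates(2, 2))         # 4 labeled arrangements
print(bose_microstates(2, 2))              # 3 distinct occupations
print(bose_microstates_brute_force(2, 2))  # 3, agrees with the formula
```

With two photons and two states, labeled counting gives equal weight to each of four arrangements, so both photons share a state half the time. Bose's counting gives three equally weighted occupations, so they share a state two-thirds of the time. That tendency to bunch together is what classical statistics misses.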

With the benefit of hindsight, Bose’s work was nothing short of revolutionary for its time. However, Oskar Klein, a Swedish theoretical physicist of repute and a Nobel Committee member, deemed it a mere advance in applied sciences rather than a major conceptual advance. With hindsight again, Nobel Prizes are now handed out for leaps in technical advancement more than ever before. In fact, the 2001 Nobel Prize in Physics went to Carl Wieman, Eric Allin Cornell, and Wolfgang Ketterle for creating the Bose-Einstein condensate, a prediction made by Einstein on the basis of Bose’s new statistics. These condensates form when atoms are cooled to near absolute zero, so that they crowd into the lowest quantum state. Even in this state the atoms retain some residual energy, the zero-point energy. The transition is a macroscopic phase change, and the condensate is regarded as a state of matter in its own right.

Such were the changing times that Bose’s work gradually received much more attention. Bose himself was fine without a Nobel, saying, “I have got all the recognition I deserve”. A modest character and a gentleman, he fits the mental image of a scientist who is a servant to the scientific discipline itself.

What’s more upsetting is that Bose is still a bit of a stranger in India, where he was born and lived. He studied physics at Presidency College, Calcutta, an institution associated with other great Indian physicists, including Jagdish Chandra Bose and Meghnad Saha. He was awarded the Padma Vibhushan, the second-highest civilian award of the Government of India, in 1954. Institutes have been named in his honour, but despite this, he receives little, if any, mention in public discourse.

Among his physicist peers, in his generation and beyond, he was recognized in the scientific lexicon itself. Paul Dirac, the British physicist, coined the name ‘bosons’ in Bose’s honor (‘bose-on’). The term refers to quantum particles, photons among them, with integer quantum spin, a classification that arose only because of Bose’s invention of quantum statistics. In fact, the Higgs boson, known in the media as the ‘god particle’, carries a bit of Bose as much as it does of Peter Higgs, who shared the 2013 Nobel Prize in Physics with François Englert for proposing the hypothesis.

Know The Scientist

Narlikar – the rare Indian scientist who penned short stories

Jayant Narlikar was one of the most prolific scientists and science communicators India has ever produced. The octogenarian died at his residence in Pune.

Jayant Narlikar passed away at his Pune residence on Tuesday. He was 86 years old, and had been diagnosed with cancer. With his demise, India lost a prolific scientist, writer, and institution builder.

In 2004, the government of India honored Narlikar with the Padma Vibhushan, the second-highest civilian award, for his services to science and society. But that was not his first recognition from the Indian government. At the age of 26, he had received the Padma Bhushan in recognition of his work in cosmology, the study of the universe’s large-scale structure. He helped derive Einstein’s field equations of gravity from a more general theory. That work, dubbed the Narlikar-Hoyle theory of gravity, was born out of a collaboration with Narlikar’s doctoral supervisor at Cambridge, Fred Hoyle, a leading astrophysicist of his time.

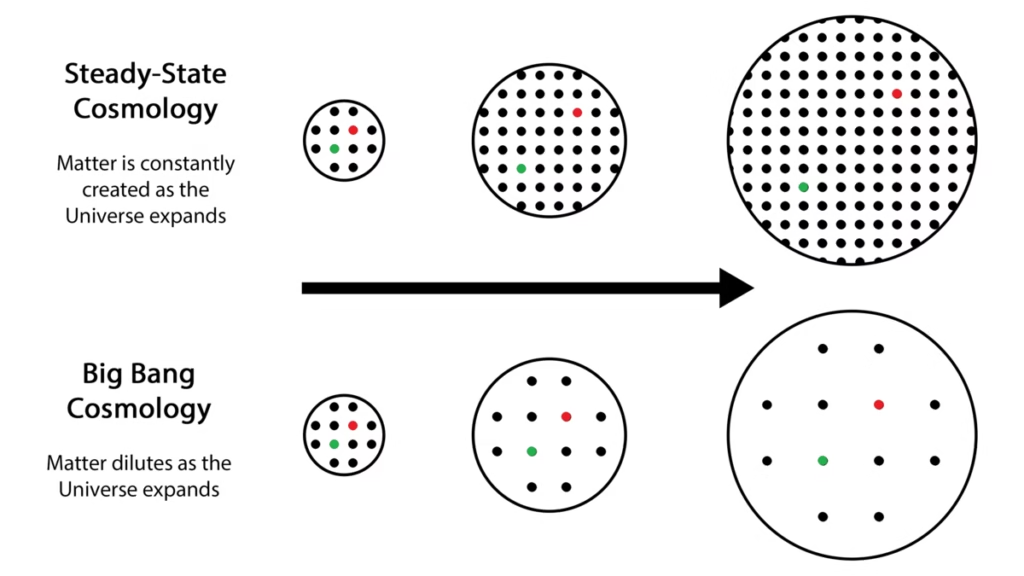

Narlikar and the steady-state theory

Narlikar and Hoyle bonded over a shared skepticism towards the prevalent Big Bang hypothesis, which extrapolates the universe’s ongoing expansion back to its birth at some finite time in the past. The two could not have been more opposed to it, mostly on account of their philosophical beliefs. They drew upon the works of the 19th-century Austrian physicist and philosopher Ernst Mach in rejecting a theory that discussed the universe’s beginning in the absence of a reference frame. Narlikar was thus a strong proponent of Hoyle’s steady-state model, in which the universe is infinite in extent and infinitely old. Steady-state theorists reconciled this with the universe’s expansion by proposing that matter is continuously created out of the vacuum, through what they called the C-field (creation field).

However, the steady-state model’s predictions did not hold up in the face of evidence that the universe evolves over time. The death knell came in 1964 with the discovery of the cosmic microwave background (CMB). Nor did its successor, the quasi-steady-state theory, sway scientific consensus.

Despite its failure, the steady-state model provided a healthy rivalry to the Big Bang from the 1940s to the 1960s, giving astronomers opportunities to compare observations against precise predictions. In the words of the Nobel laureate Steven Weinberg, “In a sense, this disagreement is a credit to the model; alone among all cosmologies, the steady state model makes such definite predictions that it can be disproved even with the limited observational evidence at our disposal.”

The Kalinga winning short-story writer

Narlikar was more than just a cosmologist. He was also an acclaimed science fiction writer, with works penned in English, Hindi, and his mother tongue, Marathi. His best-known work is the short story Dhoomekethu (The Comet), which revolves around themes of superstition, faith, and rational and scientific thinking. Published in Marathi in 1976, with translations available in Hindi, the story was later adapted into a two-hour film of the same name. In 1985, the film aired on the state-owned television broadcaster, Doordarshan.

In a way, he was India’s Carl Sagan, presenting episodes that explained astronomical concepts with children as his target audience. The seventeen-episode show, Brahmand (The Universe), aired in 1994 to wide acclaim. One of his best-known books, Akashashi Jadle Nathe (Sky-Rooted Relationship), remains popular. An e-book version in Hindi is listed on Goodreads, with 470 reviewers giving it an average rating of 4.7 out of 5.

His efforts were honored with an international prize. In 1996, he received the much-coveted Kalinga Prize for the Popularization of Science, awarded annually in India by the United Nations Educational, Scientific and Cultural Organization (UNESCO), “in recognition of his efforts to popularize science through print and electronic media.” Narlikar was only the second Indian at the time, after the popular science writer Jagjit Singh, to have received the award.

When Narlikar returned to India, accepting a position at the Tata Institute of Fundamental Research (TIFR), he realized that astrophysical research was not flourishing outside central institutions. Though Bengaluru had the Indian Institute of Astrophysics, Narlikar envisioned building a research culture like the one he had experienced at Cambridge. Hence, the Inter-University Centre for Astronomy and Astrophysics (IUCAA) was born in 1988, with Narlikar appointed its founding director. Arguably, his most visible legacy is the way he shaped India’s astrophysical research culture through his work with the IUCAA (pronounced “eye-you-ka”).

Space & Physics

Dr. Nikku Madhusudhan Brings Us Closer to Finding Life Beyond Earth

Dr. Madhusudhan, a leading Indian-British astrophysicist at the University of Cambridge, has long been on the frontlines of the search for extraterrestrial life

Somewhere in the vast, cold dark of the cosmos, a planet orbits a distant star. It’s not a place you’d expect to find life—but if Dr. Nikku Madhusudhan is right, that assumption may soon be history.

Dr. Madhusudhan, a leading Indian-British astrophysicist at the University of Cambridge, has long been on the frontlines of the search for extraterrestrial life, popularly known as alien life. This month, his team made headlines around the world after revealing what could be the strongest evidence yet of life beyond Earth, on a distant exoplanet known as K2-18b.

Using data from NASA’s James Webb Space Telescope, Madhusudhan and his collaborators detected atmospheric signatures of molecules commonly associated with biological processes on Earth, specifically gases produced by marine phytoplankton and certain bacteria. The detection reached the three-sigma level of statistical significance, meaning there is roughly a 99.7% probability that the signal is real rather than a chance fluctuation; it does not by itself establish that the molecules come from living organisms.

“This marked the first detection of carbon-bearing molecules in the atmosphere of an exoplanet located within the habitable zone,” the University of Cambridge said in a press statement. “The findings align with theoretical models of a ‘Hycean’ planet — a potentially habitable, ocean-covered world enveloped by a hydrogen-rich atmosphere.”

In addition, a fainter signal suggested there could be other unexplained processes occurring on K2-18b. “We didn’t know for sure whether the signal we saw last time was due to DMS, but just the hint of it was exciting enough for us to have another look with JWST using a different instrument,” said Professor Nikku Madhusudhan in a news report released by the University of Cambridge.

The man behind the mission

Born in India, Dr. Madhusudhan began his journey in science with an engineering degree from IIT (BHU) Varanasi. But it was during his time at the Massachusetts Institute of Technology (MIT), under the mentorship of exoplanet pioneer Prof. Sara Seager, that he found his calling. His doctoral work—developing methods to retrieve data from exoplanet atmospheres—would go on to form the backbone of much of today’s planetary climate modeling.

Now a professor at the University of Cambridge’s Institute of Astronomy, Madhusudhan leads research that straddles the line between science fiction and frontier science.

A Universe of Firsts

Over the years, his work has broken new ground in our understanding of alien worlds. He was among the first to propose the concept of “Hycean planets”: worlds with oceans of liquid water beneath hydrogen-rich atmospheres, conditions which may be ideal for life. He also led the detection of titanium oxide in the atmosphere of WASP-19b and pioneered studies of K2-18b, the same exoplanet now back in the spotlight.

His team’s recent findings on K2-18b may be the closest humanity has ever come to detecting life elsewhere in the universe.

Accolades and impact

Madhusudhan’s contributions have earned him global recognition. He received the prestigious IUPAP Young Scientist Medal in 2016 and the MERAC Prize in Theoretical Astrophysics in 2019. In 2014, the Astronomical Society of India awarded him the Vainu Bappu Gold Medal for outstanding contributions to astrophysics by a scientist under 35.

But for Madhusudhan, the real reward lies in the questions that remain unanswered.

Looking ahead

Madhusudhan cautions that, while the findings are promising, more data is needed before drawing conclusions about the presence of life on another planet. He remains cautiously optimistic but notes that the observations of K2-18b could also be explained by previously unknown chemical processes. Together with his colleagues, he plans to pursue further theoretical and experimental studies to investigate whether compounds like dimethyl sulfide (DMS) and dimethyl disulfide (DMDS) could be produced through non-biological means at the levels currently detected.

Beyond the lab, Madhusudhan remains dedicated to mentoring students and advancing scientific outreach. He’s a firm believer that the next big discovery might come from a student inspired by the stars, just as he once was.

As scientists prepare for the next wave of data and the world watches closely, one thing is clear: thanks to minds like Dr. Nikku Madhusudhan’s, the search for life beyond Earth is no longer a distant dream—it’s a scientific reality within reach.
