Space & Physics

In search of red aurorae in ancient Japan

Ryuho Kataoka, a Japanese auroral scientist, played a seminal role in searching for evidence of super-geomagnetic storms in the past using historical methods

Aurorae seen on Earth are the final stage of a complex chain of events that begins with violent, dynamic activity deep within the sun’s interior.

However, studying the depths of the sun is no easy task, even for scientists. The best they can do is observe the surface using space-based telescopes. One problem that scientists are attempting to solve is how a super-geomagnetic storm on Earth comes into being. These geomagnetic storms find their roots in sunspots, which are acne-like depressions on the sun’s surface. As the sun approaches the peak of its 11-year solar cycle, these sunspots, numbering in the hundreds, occasionally release their stored magnetic energy into deep space in the form of coronal mass ejections (CMEs), hot wisps of gas superheated to thousands of degrees.


If the Earth lies in the path of an oncoming CME, the energy released when the CME’s magnetic field interacts with Earth’s own can cause intense geomagnetic storms and aurorae.

This phenomenon, which is astrophysical and also electromagnetic in nature, can have serious repercussions for our modern technological society.

Super-geomagnetic storms, a particularly severe form of geomagnetic storm, can induce power surges in our infrastructure, causing outages that could plunge the world into darkness and inflict irreversible damage. The last recorded super-geomagnetic storm occurred more than 150 years ago. Known as the Carrington event, the storm destroyed telegraph lines across North America and Europe in 1859. The chance of another Carrington-class event was estimated at 1 in 500 years, which is quite low but based on limited data. The ramifications would be extremely dangerous were it ever to happen.

However, in the past decade, scientists have learnt that such super-geomagnetic storms are much more common than they had figured. What is more, it wasn’t science alone but a valuable contribution from art, specifically ancient Japanese and Chinese historical records, that shaped our modern understanding of super-geomagnetic storms.

Ryuho Kataoka, a Japanese space physicist, played a seminal role in searching for evidence of super-geomagnetic storms in the past using historical methods. He is presently an associate professor in physics, holding positions at Japan’s National Institute of Polar Research, and The Graduate University for Advanced Studies.

“There is no modern digital dataset to identify extreme space weather events, particularly super-geomagnetic storms,” said Professor Kataoka. “If you have good enough data, we can input them into supercomputers to do physics-based simulation.”

However, sunspot records go back only as far as the late 18th century, when sunspots began to be actively cataloged. In an effort to fill the data gap, Professor Kataoka decided to take the helm of a new but promising interdisciplinary field combining the arts with space physics. “The data is limited by at least 50 years,” said Professor Kataoka. “So we decided to search for these red vapor events in Japanese history, and see the occurrence patterns … and if we are lucky enough, we can see detailed features in these lights, pictures or drawings.” Until the summer of 2015, Ryuho Kataoka wasn’t aware of how vast ancient Japanese and Chinese historical records really were.


In the past 7 years, he has searched for a very specific phenomenon, the red aurora, in documents spanning more than 1,400 years. “Usually, auroras are known for their green colors – but during the geomagnetic storm, the situation is very different,” he said. “Red is of course unusual, but we can only see red during a powerful geomagnetic storm, especially in lower latitudes. From a scientific perspective, it’s a very reasonable way to search for red signs in historical documents.”

Much of the historical material on red aurorae that Professor Kataoka studied came from literature explored over the last decade by the AURORA-4D collaboration. “The project title included ‘4D’, because we wanted to access records dating back 400 years back during the Edo period,” said Professor Kataoka.

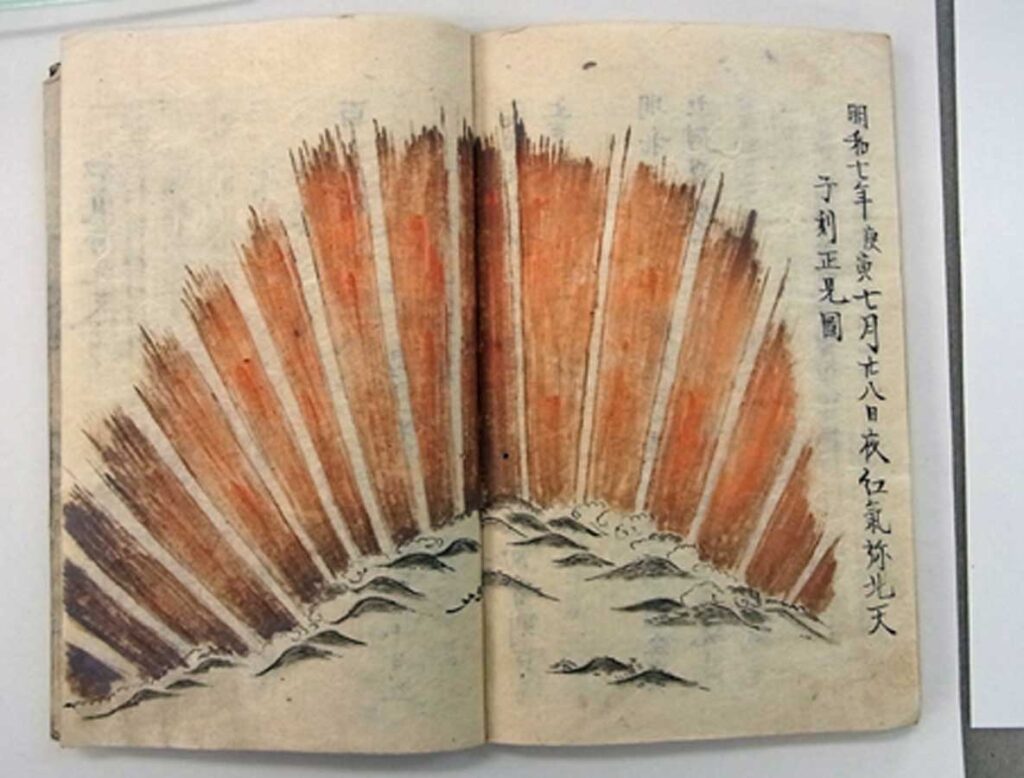

“From the paintings, we can identify the latitude of the aurora, and calculate the magnitude or amplitude of the geomagnetic storm.” Clearly, paintings from the Edo period influenced Professor Kataoka’s line of research: a copy of the fan-shaped red aurora painting from the manuscript Seikai (which translates to ‘stars’) hangs on the window behind his office desk at the National Institute of Polar Research.

The painting fascinated Professor Kataoka, since it depicted an aurora that originated during a super-geomagnetic storm over Kyoto in 1770. However, the painting did surprise him at first, since he wondered whether the radial patterns in the painting were real, or a mere artistic touch to make it look fierier. “That painting was special because this was the most detailed painting preserved in Japan,” remarked Professor Kataoka. “I took two years to study this, thinking this appearance was silly as an aurorae scientist. But when I calculated the field pattern from Kyoto towards the North, it was actually correct!”

Fan-shaped red aurora painting from the ‘Seikai’, dated 17th September, 1770; Picture Courtesy: Matsusaka City, Mie Prefecture.

The possibility to examine and verify historical accounts using science is also a useful incentive for scholars of Japanese literature and scientists partaking in the research.

“This is important because, if we scientists look at the real National Treasure with our eyes, we really know these sightings recorded were real,” said Professor Kataoka. “The internet is really bad for a survey because it can easily be very fake,” he said laughing. What is appealing is not only that science was used to examine art, including Japanese “national treasures”; the historical accounts themselves have contributed directly to scientific research.

“From our studies, we can say that the Carrington class events are more frequent than we previously expected,” said Professor Kataoka. There was a sense of pride in him as he said this. “This Carrington event is not a 1 in 200-year event, but as frequent as 1 in 100 years.” Given that electricity is the lifeblood of the 21st century, these heightened odds conjure a rather dystopian picture of a future society ravaged by a super-geomagnetic storm.
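To put these odds in perspective, a recurrence interval can be turned into the chance of seeing at least one such storm within a given span of years. The sketch below is only an illustration and is not taken from Professor Kataoka's work: it assumes storms arrive independently at a constant average rate (a Poisson process), and the function name `prob_at_least_one` and the 10-year window are hypothetical choices made for this example.

```python
import math


def prob_at_least_one(return_period_years: float, window_years: float) -> float:
    """Chance of one or more events in a window.

    Assumes events follow a Poisson process with a constant average
    rate of 1 / return_period_years (an illustrative assumption).
    """
    rate_per_year = 1.0 / return_period_years
    return 1.0 - math.exp(-rate_per_year * window_years)


# Recurrence intervals quoted in the article: 1 in 500, 200 and 100 years.
for period in (500, 200, 100):
    p = prob_at_least_one(period, window_years=10)
    print(f"1-in-{period}-year event: {p:.1%} chance in any given decade")
```

Under these assumptions, moving from a 1-in-500-year to a 1-in-100-year estimate raises the chance of a Carrington-class storm in any given decade from roughly 2 per cent to roughly 10 per cent.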

Professor Kataoka’s work has found attention within the space physics community. Jonathon Eastwood, Professor of Physics at Imperial College London, told EdPublica, “The idea to use historical information and art like this is very inventive because these events are so rare and so don’t exist as information in the standard scientific record.”

There’s no physical harm from a geomagnetic storm, but the threat to global power supply and electronics is being increasingly recognized by world governments. The UK, for instance, identified “space weather” as a natural hazard in its 2011 National Risk Register. In the years that followed, the government set up a space weather division in the Met Office, the UK’s foremost weather forecasting authority, to monitor and track occurrences of these coronal mass ejections. However, these forecasts, which often supplement predictions from the American National Oceanic and Atmospheric Administration (NOAA), have previously failed either to specify where on Earth a magnetic storm could brew or to predict whether a coronal mass ejection would actually strike the Earth.


The former occurred during the evacuation for Hurricane Irma in 2017, when amateur radio operators experienced a radio blackout as a magnetic storm affected communications across the Caribbean. The latter occurred on another occasion, when a launch of SpaceX’s Starlink communication satellites was disrupted by a mild geomagnetic storm, costing SpaceX over $40 million.

Professor Kataoka said he hopes space physicists from other countries will take part in similar interdisciplinary collaborations to explore their own cultures’ historical records for red aurora sightings. He said the greatest limitation of the AURORA-4D collaboration was the lack of historical records from other parts of the world. China apparently boasts a longer history of aurora records than Japan, reaching back to before the time of Christ. “Being Japanese, I’m not familiar with British, Finnish or Vietnamese cultures,” said Professor Kataoka. “But every country has literature researchers and scientists who can easily collaborate and perform interdisciplinary research.” By doing so, it is not just science that benefits, but also ancient art, whose beauty and relevance gain longevity.


Nobel Prize in Physics: Clarke, Devoret, and Martinis Honoured for Pioneering Quantum Discoveries

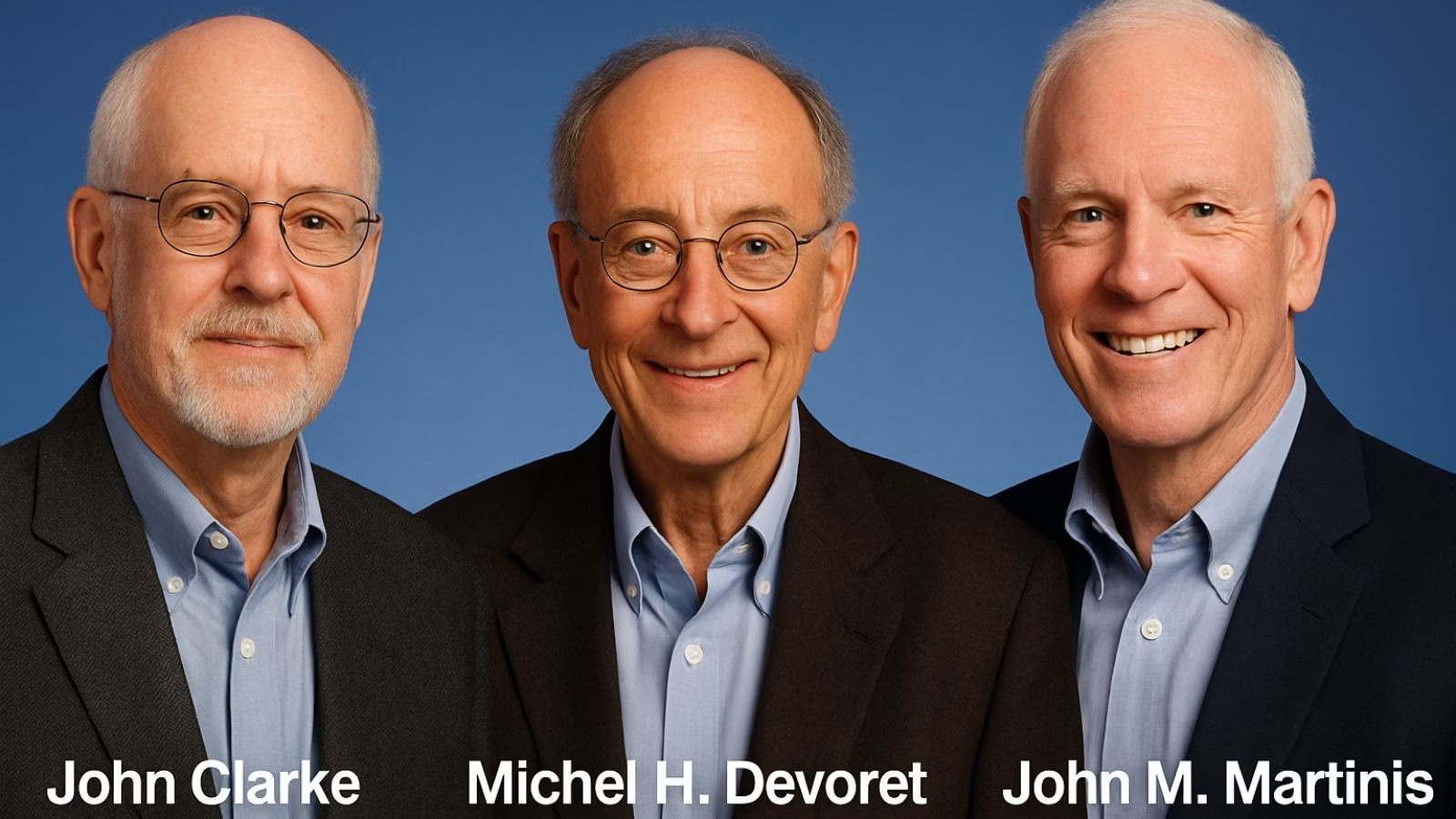

The 2025 Nobel Prize in Physics honours John Clarke, Michel H. Devoret, and John M. Martinis for revealing how entire electrical circuits can display quantum behaviour — a discovery that paved the way for modern quantum computing.

The 2025 Nobel Prize in Physics has been awarded to John Clarke, Michel H. Devoret, and John M. Martinis for their landmark discovery of macroscopic quantum mechanical tunnelling and energy quantisation in an electric circuit, an innovation that laid the foundation for today’s quantum computing revolution.

Announcing the prize, Olle Eriksson, Chair of the Nobel Committee for Physics, said, “It is wonderful to be able to celebrate the way that century-old quantum mechanics continually offers new surprises. It is also enormously useful, as quantum mechanics is the foundation of all digital technology.”

The Committee described their discovery as a “turning point in understanding how quantum mechanics manifests at the macroscopic scale,” bridging the gap between classical electronics and quantum physics.

John Clarke: The SQUID Pioneer

British-born John Clarke, Professor Emeritus at the University of California, Berkeley, is celebrated for his pioneering work on Superconducting Quantum Interference Devices (SQUIDs) — ultra-sensitive detectors of magnetic flux. His career has been marked by contributions that span superconductivity, quantum amplifiers, and precision measurements.

Clarke’s experiments in the mid-1980s provided the first clear evidence of quantum behaviour in electrical circuits, showing that entire electrical systems, not just atoms or photons, can obey the strange laws of quantum mechanics.

A Fellow of the Royal Society, Clarke has been honoured with numerous awards including the Comstock Prize (1999) and the Hughes Medal (2004).

Michel H. Devoret: Architect of Quantum Circuits

French physicist Michel H. Devoret, now the Frederick W. Beinecke Professor Emeritus of Applied Physics at Yale University, has been one of the intellectual architects of quantronics — the study of quantum phenomena in electrical circuits.

After earning his PhD at the University of Paris-Sud and completing a postdoctoral fellowship under Clarke at Berkeley, Devoret helped establish the field of circuit quantum electrodynamics (cQED), which underpins the design of modern superconducting qubits.

His group’s innovations — from the single-electron pump to the fluxonium qubit — have set performance benchmarks in quantum coherence and control. Devoret is also a recipient of the Fritz London Memorial Prize (2014) and the John Stewart Bell Prize, and is a member of the French Academy of Sciences.

John M. Martinis: Building the Quantum Processor

American physicist John M. Martinis, who completed his PhD at UC Berkeley under Clarke’s supervision, translated these quantum principles into the hardware era. His experiments demonstrated energy level quantisation in Josephson junctions, one of the key results now honoured by the Nobel Committee.

Martinis later led Google’s Quantum AI lab, where his team in 2019 achieved the world’s first demonstration of quantum supremacy — showing a superconducting processor outperforming the fastest classical supercomputer on a specific task.

A former professor at UC Santa Barbara, Martinis continues to be a leading voice in quantum computing research and technology development.

A Legacy of Quantum Insight

Together, the trio’s discovery, once seen as a niche curiosity in superconducting circuits, has become the cornerstone of the global quantum revolution. Their experiments proved that macroscopic electrical systems can display quantised energy states and tunnel between them, much like subatomic particles.

Their work, as the Nobel citation puts it, “opened a new window into the quantum behaviour of engineered systems, enabling technologies that are redefining computation, communication, and sensing.”


The Tiny Grip That Could Reshape Medicine: India’s Dual-Trap Optical Tweezer

Indian scientists build new optical tweezer module, set to transform single-molecule research and medical innovation

In an inventive leap that could open up new frontiers in neuroscience, drug development, and medical research, scientists in India have designed their own version of a precision laboratory tool known as the dual-trap optical tweezers system. By creating a homegrown solution to manipulate and measure forces on single molecules, the team brings world-class technology within reach of Indian researchers—potentially igniting a wave of scientific discoveries.

Optical tweezers, an invention recognised with the 2018 Nobel Prize in Physics, use focused beams of light to grab and move microscopic objects with extraordinary accuracy. The technique has become indispensable for measuring tiny forces and exploring the mechanics of DNA, proteins, living cells, and engineered nanomaterials. Yet, decades after their invention, conventional optical tweezers systems sometimes fall short for today’s most challenging experiments.
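For readers curious how light can "measure" a force, a common textbook picture treats the trapped bead as if it sat on a tiny spring: the further it is pushed from the trap centre, the harder the light pulls it back. The sketch below illustrates that general idea only. It is not the RRI team's method; the function names, the simulated bead positions and the chosen stiffness are all hypothetical, and the calibration shown is the standard equipartition estimate (stiffness equals thermal energy divided by the variance of the bead's position).

```python
import random
import statistics

K_B = 1.380649e-23  # Boltzmann constant in J/K


def trap_stiffness(positions_m, temperature_k=295.0):
    """Equipartition estimate of trap stiffness in N/m.

    Uses k = k_B * T / variance of the bead's position along one axis.
    """
    return K_B * temperature_k / statistics.pvariance(positions_m)


def trap_force(stiffness_n_per_m, displacement_m):
    """Magnitude of the spring-like restoring force (Hooke's law), in N."""
    return stiffness_n_per_m * displacement_m


# Simulated thermal jitter of a bead in a trap of known, made-up stiffness.
random.seed(0)
true_k = 1e-5  # N/m, i.e. 0.01 pN/nm (hypothetical value)
sigma = (K_B * 295.0 / true_k) ** 0.5  # expected position spread in metres
positions = [random.gauss(0.0, sigma) for _ in range(20000)]

k_est = trap_stiffness(positions)
force_pn = trap_force(k_est, 50e-9) * 1e12  # force at 50 nm, in piconewtons

print(f"Estimated stiffness: {k_est * 1e3:.4f} pN/nm")
print(f"Force at 50 nm displacement: {force_pn:.2f} pN")
```

In this picture, an accurate force reading depends entirely on an accurate position reading for each bead, which is why interference between the two detection channels matters so much.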

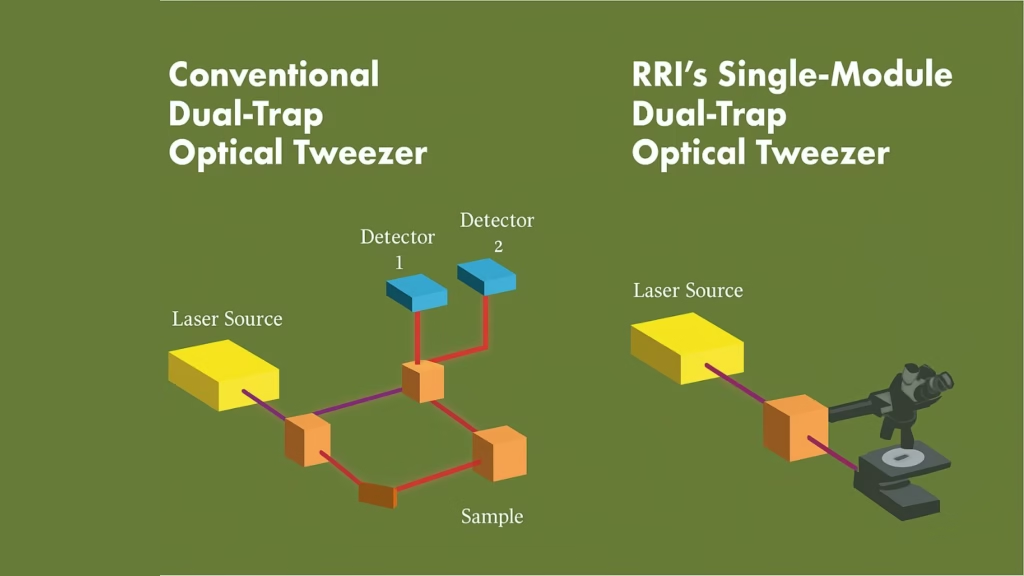

Researchers at the Raman Research Institute (RRI) in Bengaluru, an autonomous institute backed by India’s Department of Science and Technology, have now introduced a smart upgrade that addresses long-standing pitfalls of dual-trap tweezers. Traditional setups rely on measuring the light that passes through particles trapped in two separate beams, a method prone to signal “cross-talk.” This makes simultaneous, independent measurement difficult, diminishing both accuracy and versatility.

The new system pioneers a confocal detection scheme. In a media statement, Md Arsalan Ashraf, a doctoral scholar at RRI, explained, “The unique optical trapping scheme utilizes laser light scattered back by the sample for detecting trapped particle position. This technique pushes past some of the long-standing constraints of dual-trap configurations and removes signal interference. The single-module design integrates effortlessly with standard microscopy frameworks,” he said.

The refinement doesn’t end there. The system ensures that detectors tracking tiny particles remain perfectly aligned, even when the optical traps themselves move. The result: two stable, reliable measurement channels, zero interference, and no need for complicated re-adjustment mid-experiment—a frequent headache with older systems.

Traditional dual-trap designs have required costly and complex add-ons, sometimes even hijacking the features of laboratory microscopes and making additional techniques, such as phase contrast or fluorescence imaging, hard to use. “This new single-module trapping and detection design makes high-precision force measurement studies of single molecules, probing of soft materials including biological samples, and micromanipulation of biological samples like cells much more convenient and cost-effective,” said Pramod A Pullarkat, lead principal investigator at RRI, in a statement.

By removing cross-talk and offering robust stability—whether traps are close together, displaced, or the environment changes—the RRI team’s approach is not only easier to use but far more adaptable. Its plug-and-play module fits onto standard microscopes without overhauling their basic structure.

From the intellectual property point of view, this design may be a game-changer. By cracking the persistent problem of signal interference with minimalist engineering, the new setup enhances measurement precision and reliability—essential advantages for researchers performing delicate biophysical experiments on everything from molecular motors to living cells.

With the essential building blocks in place, the RRI team is now exploring commercial avenues to produce and distribute their single-module, dual-trap optical tweezer system as an affordable add-on for existing microscopes. The innovation stands to put advanced single-molecule force spectroscopy, long limited to wealthier labs abroad, into the hands of scientists across India—and perhaps spark breakthroughs across the biomedical sciences.


New Magnetic Transistor Breakthrough May Revolutionize Electronics

A team of MIT physicists has created a magnetic transistor that could make future electronics smaller, faster, and more energy-efficient. By swapping silicon for a new magnetic semiconductor, they’ve opened the door to game-changing advancements in computing.

For decades, silicon has been the undisputed workhorse in transistors—the microscopic switches responsible for processing information in every phone, computer, and high-tech device. But silicon’s physical limits have long frustrated scientists seeking ever-smaller, more efficient electronics.

Now, MIT researchers have unveiled a major advance: they’ve replaced silicon with a magnetic semiconductor, introducing magnetism into transistors in a way that promises tighter, smarter, and more energy-saving circuits. This new ingredient, chromium sulfur bromide, makes it possible to control electricity flow with far greater efficiency and could even allow each transistor to “remember” information, simplifying circuit design for future chips.

“This lack of contamination enables their device to outperform existing magnetic transistors. Most others can only create a weak magnetic effect, changing the flow of current by a few percent or less. Their new transistor can switch or amplify the electric current by a factor of 10,” the MIT team said in a media statement. Their work, detailed in Physical Review Letters, outlines how this material’s stability and clean switching between magnetic states unlocks a new degree of control.

Chung-Tao Chou, MIT graduate student and co-lead author, explains in a media statement, “People have known about magnets for thousands of years, but there are very limited ways to incorporate magnetism into electronics. We have shown a new way to efficiently utilize magnetism that opens up a lot of possibilities for future applications and research.”

The device’s game-changing aspect is its ability to combine the roles of memory cell and transistor, allowing electronics to read and store information faster and more reliably. “Now, not only are transistors turning on and off, they are also remembering information. And because we can switch the transistor with greater magnitude, the signal is much stronger so we can read out the information faster, and in a much more reliable way,” said Luqiao Liu, MIT associate professor, in a media statement.

Moving forward, the team is looking to scale up their clean manufacturing process, hoping to create arrays of these magnetic transistors for broader commercial and scientific use. If successful, the innovation could usher in a new era of spintronic devices, where magnetism becomes as central to electronics as silicon is today.
