The Sciences

IIT Kanpur researchers visualize Duffy antigen receptor, advancing the fight against malaria and HIV

Researchers achieve a landmark visualization of a key cell receptor, paving the way for new drugs against infectious diseases. The milestone could help combat drug-resistant infections and advance the fight against diseases like malaria and HIV.

A research team from the Indian Institute of Technology Kanpur (IITK), led by Prof. Arun K. Shukla from the Department of Biological Sciences and Bioengineering, has achieved a major scientific milestone by visualizing the complete structure of the Duffy antigen receptor for the first time. This receptor protein, located on the surface of red blood cells and other cells, serves as an entry point for harmful pathogens, including the malaria parasite Plasmodium vivax and the bacterium Staphylococcus aureus.

The groundbreaking research, published in the peer-reviewed journal Cell, provides valuable new insights for scientists tackling antimicrobial drug resistance. With drug-resistant infections on the rise, this detailed visualization of the Duffy receptor structure could lead to significant advances in developing new therapies for drug-resistant malaria, Staphylococcus infections, and potentially other diseases like HIV.

“For years, researchers worldwide have been working to unravel the secrets of the Duffy antigen receptor due to its role as a ‘gateway’ that helps bacteria and parasites invade our cells and cause disease. Our achievement in finally visualizing this receptor at high resolution will enhance our understanding of how pathogens exploit it to infect cells,” said Prof. Arun K. Shukla from IIT Kanpur.

According to him, this knowledge will aid in the design of next-generation medicines, including new antibiotics and antimalarials, particularly as we face increasing antimicrobial resistance.

“While the Duffy antigen receptor is common in most populations, a significant percentage of people of African descent lack this receptor on their red blood cells due to a genetic variation. As a result, they are naturally resistant to certain types of malaria parasites that rely on this specific ‘gateway’ to infect the cells. This highlights the crucial role of the Duffy antigen receptor in these diseases and suggests that targeting it could lead to new treatments,” added Prof. Shukla.

The research team utilised cutting-edge cryogenic electron microscopy (cryo-EM) to reveal the intricate architecture of the Duffy antigen receptor, illuminating its unique structural features and distinguishing it from similar receptors in the human body. This detailed insight is essential for designing highly targeted therapies that can effectively block infections while minimising unwanted side effects.

Prof. Manindra Agrawal, Director, IIT Kanpur said, “This remarkable achievement is a result of our institution’s support for cutting-edge research that addresses real-world problems and solidifies our standing on the global scientific stage. This will enhance our understanding of infectious diseases and help develop therapies for drug-resistant pathogens.”

The research team comprised Shirsha Saha, Jagannath Maharana, Saloni Sharma, Nashrah Zaidi, Annu Dalal, Sudha Mishra, Manisankar Ganguly, Divyanshu Tiwari, Ramanuj Banerjee, and Prof. Arun Kumar Shukla from IIT Kanpur. Researchers from CDRI Lucknow, Zurich in Switzerland, Suwon in the Republic of Korea, Tohoku in Japan, and Belfast in the United Kingdom also contributed to the study. The research was primarily funded by the Department of Biotechnology (DBT), the Department of Science and Technology (DST), the Science and Engineering Research Board (SERB), the DBT Wellcome Trust India Alliance, and IIT Kanpur.

The Sciences

Most Earthquake Energy Is Spent Heating Up Rocks, Not Shaking the Ground, New MIT Study Finds

How do earthquakes spend their energy? MIT’s latest research shows heat—not ground motion—is the main outcome of a quake, reshaping how scientists understand seismic risks

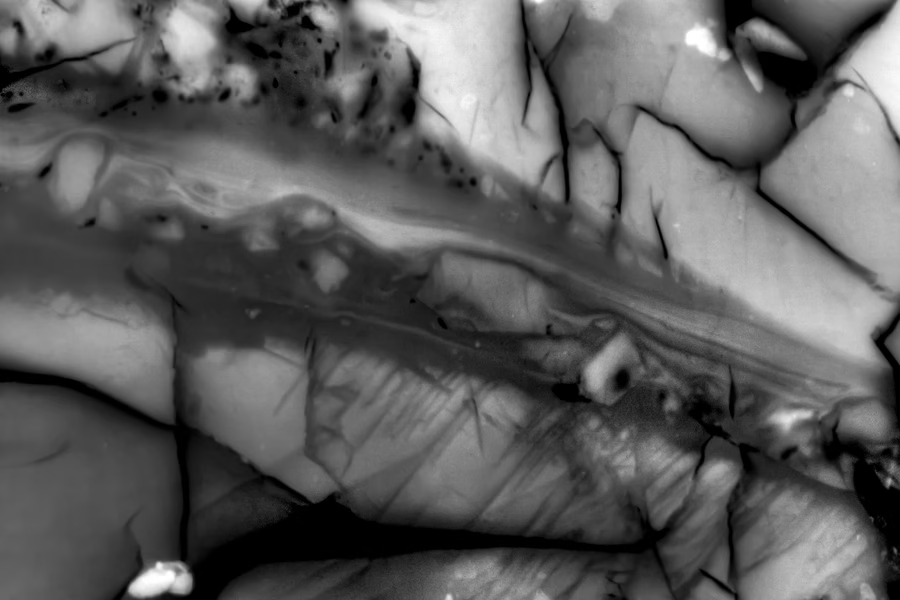

When an earthquake strikes, we experience its violent shaking on the surface. But new research from MIT shows that most of a quake’s energy actually goes into something entirely different — heat.

Using miniature “lab quakes” designed to mimic real seismic slips deep underground, geologists at MIT have, for the first time, mapped the full energy budget of an earthquake. Their study reveals that only about 10 percent of a quake’s energy translates into ground shaking, while less than 1 percent goes into fracturing rock. The vast majority — nearly 80 percent — is released as heat at the fault, sometimes creating sudden spikes hot enough to melt surrounding rock.
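The split described above can be sketched as simple arithmetic. The snippet below is only an illustration: it treats the article's approximate shares ("about 10 percent", "less than 1 percent", "nearly 80 percent") as point values, and the 1,000-joule total and the `partition_energy` helper are hypothetical, not taken from the study. Because the reported figures are rounded, they do not add up to 100 percent; the leftover share reflects that rounding and is not a measured quantity.

```python
# Approximate shares quoted in the article, treated here as point values.
# "fracturing" uses the stated upper bound (less than 1 percent).
SHARES = {"shaking": 0.10, "fracturing": 0.01, "heating": 0.80}


def partition_energy(total_joules, shares):
    """Split a total energy into the named shares (hypothetical helper).

    Whatever the rounded shares leave over is reported as "unattributed".
    """
    budget = {name: total_joules * fraction for name, fraction in shares.items()}
    budget["unattributed"] = total_joules - sum(budget.values())
    return budget


# Hypothetical total energy, chosen only to make the numbers easy to read.
budget = partition_energy(1000.0, SHARES)
for name, joules in budget.items():
    print(f"{name}: {joules:.1f} J")
```

With these assumed inputs, heating receives eight times the energy that goes into shaking, which is the article's central point.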

“These results show that what happens deep underground is far more dynamic than what we feel on the surface,” said Daniel Ortega-Arroyo, a graduate researcher in MIT’s Department of Earth, Atmospheric and Planetary Sciences, in a media statement. “A rock’s deformation history — essentially its memory of past seismic shifts — dictates how much energy ends up in shaking, breaking, or heating. That history plays a big role in determining how destructive a quake can be.”

The team’s findings, published in AGU Advances, suggest that understanding a fault zone’s “thermal footprint” might be just as important as recording surface tremors. Laboratory-created earthquakes, though simplified models of natural ones, provide a rare window into processes that are otherwise impossible to observe deep within Earth’s crust.

MIT researchers created the “microshakes” by applying immense pressures to samples of granite mixed with magnetic particles that acted as ultra-sensitive heat gauges. By stacking the results of countless tiny quakes, they tracked exactly how the energy was distributed among shaking, fracturing, and heating. Some events saw fault zones heat up to over 1,200 degrees Celsius in mere microseconds, momentarily liquefying parts of the rock before cooling again.

“We could never reproduce the full complexity of Earth, so we simplify,” explained co-author Matěj Peč, MIT associate professor of geophysics. “By isolating the physics in the lab, we can begin to understand the mechanisms that govern real earthquakes — and apply this knowledge to better models and risk assessments.”

The work also provides a fresh perspective on why some regions remain vulnerable long after previous seismic activity. Past quakes, by altering the structure and material properties of rocks, may influence how future ones unfold. If researchers can estimate how much heat was generated in past quakes, they might be able to assess how much stress still lingers underground — a factor that could refine earthquake forecasting.

The study was conducted by Ortega-Arroyo and Peč, along with colleagues from MIT, Harvard University, and Utrecht University.

Health

Giant Human Antibody Found to Act Like a Brace Against Bacterial Toxins

This synergistic bracing action gives IgM a unique advantage in neutralizing bacterial toxins that are exposed to mechanical forces inside the body

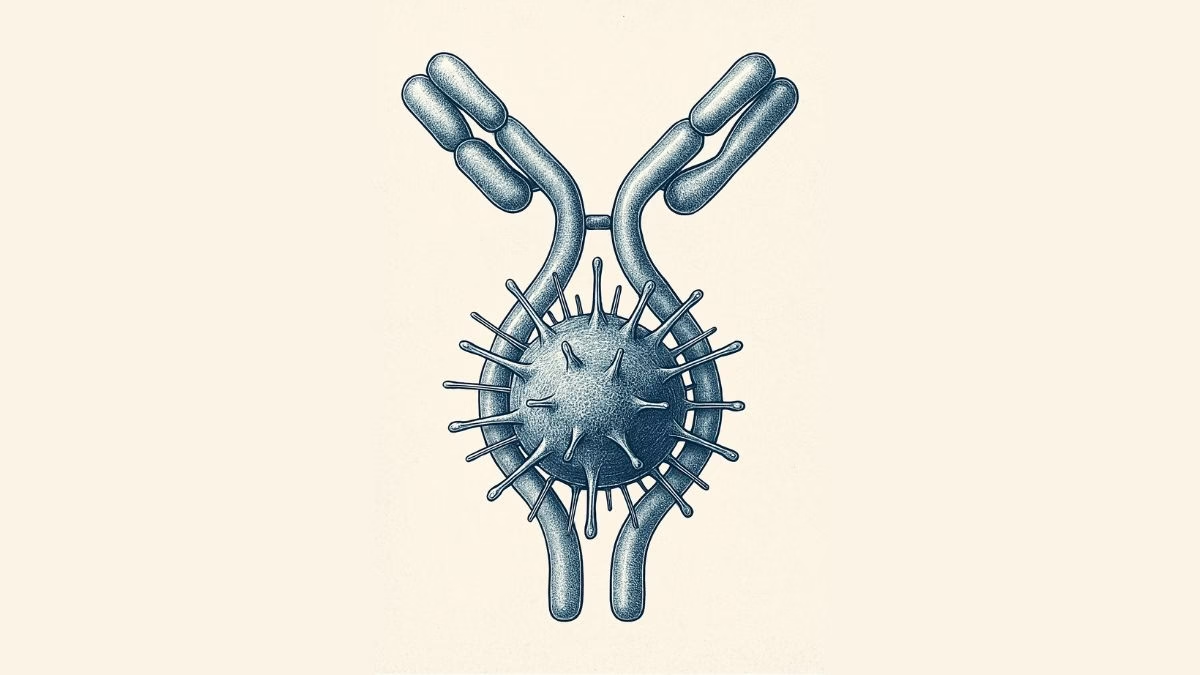

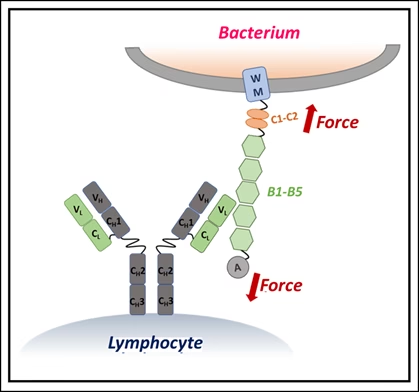

Our immune system’s largest antibody, IgM, has revealed a hidden superpower — it doesn’t just latch onto harmful microbes, it can also act like a brace, mechanically stabilizing bacterial toxins and stopping them from wreaking havoc inside our bodies.

A team of scientists from the S.N. Bose National Centre for Basic Sciences (SNBNCBS) in Kolkata, India, an autonomous institute under the Department of Science and Technology (DST), made this discovery in a recent study. The team reports that IgM can mechanically stiffen bacterial proteins, preventing them from unfolding or losing shape under physical stress.

“This changes the way we think about antibodies,” the researchers said in a media statement. “Traditionally, antibodies are seen as chemical keys that unlock and disable pathogens. But we show they can also serve as mechanical engineers, altering the physical properties of proteins to protect human cells.”

Unlocking a new antibody role

Our immune system produces many different antibodies, each with a distinct function. IgM, the largest and one of the very first antibodies generated when our body detects an infection, has long been recognized for its front-line defense role. But until now, little was known about its ability to physically stabilize dangerous bacterial proteins.

The SNBNCBS study focused on Protein L, a molecule produced by Finegoldia magna. This bacterium is generally harmless but can become pathogenic in certain situations. Protein L acts as a “superantigen,” binding to parts of antibodies in unusual ways and interfering with immune responses.

Using single-molecule force spectroscopy — a high-precision method that applies minuscule forces to individual molecules — the researchers discovered that when IgM binds Protein L, the bacterial protein becomes more resistant to mechanical stress. In effect, IgM braces the molecule, preventing it from unfolding under physiological forces, such as those exerted by blood flow or immune cell pressure.

Why size matters

The stabilizing effect depended on IgM concentration: more IgM meant stronger resistance. Simulations showed that this is because IgM’s large structure carries multiple binding sites, allowing it to clamp onto Protein L at several locations simultaneously. Smaller antibodies lack this kind of stabilizing network.

“This synergistic bracing action gives IgM a unique advantage in neutralizing bacterial toxins that are exposed to mechanical forces inside the body,” the researchers explained.

The finding highlights an overlooked dimension of how our immune system works — antibodies don’t merely bind chemically but can also act as mechanical modulators, physically disarming toxins.

Such insights could open a new frontier in drug development, where future therapies may involve engineering antibodies to stiffen harmful proteins, effectively locking them in a harmless state.

The study suggests that by harnessing this natural bracing mechanism, scientists may be able to design innovative treatments that go beyond traditional antibody functions.

Math

Researchers Unveil Breakthrough in Efficient Machine Learning with Symmetric Data

MIT researchers have developed the first mathematically proven method for training machine learning models that can efficiently interpret symmetric data—an advance that could significantly enhance the accuracy and speed of AI systems in fields ranging from drug discovery to climate analysis.

In drug discovery, for example, a human looking at a rotated image of a molecule easily recognizes it as the same compound. A standard machine learning model, however, may classify the rotated image as an entirely different molecule, a blind spot in current AI approaches. The shortcoming arises when a model fails to account for symmetry: the fact that an object’s fundamental properties remain unchanged under transformations such as rotation.
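A small sketch can make the rotation problem concrete. The example below is not the MIT researchers' algorithm; it is a generic illustration, with a made-up three-atom "molecule" in 2D and hypothetical helper names. It compares raw coordinates, which change when the molecule is rotated, with sorted pairwise distances, a simple descriptor that stays the same under rotation.

```python
import math
from itertools import combinations


def rotate(points, angle_rad):
    """Rotate 2D points about the origin by the given angle."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [(c * x - s * y, s * x + c * y) for x, y in points]


def raw_features(points):
    """Flatten coordinates; this representation changes under rotation."""
    return [coord for point in points for coord in point]


def invariant_features(points):
    """Sorted pairwise distances; unchanged by rotating the whole object."""
    return sorted(math.dist(p, q) for p, q in combinations(points, 2))


def close(a, b, tol=1e-9):
    """Compare two feature lists up to floating-point tolerance."""
    return len(a) == len(b) and all(
        math.isclose(x, y, abs_tol=tol) for x, y in zip(a, b)
    )


# Hypothetical "molecule": three atom positions, invented for illustration.
molecule = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)]
rotated = rotate(molecule, math.radians(90))

raw_changed = not close(raw_features(molecule), raw_features(rotated))
invariant_same = close(invariant_features(molecule), invariant_features(rotated))
print("raw coordinates changed:", raw_changed)
print("pairwise distances unchanged:", invariant_same)
```

A model fed the raw coordinates has to learn from data that both versions are the same object, while one fed the distance descriptor gets that for free. Pairwise distances are only one simple choice and discard some information, since they are also unchanged by reflections, for instance.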

“If a drug discovery model doesn’t understand symmetry, it could make inaccurate predictions about molecular properties,” the researchers explained. While some empirical techniques have shown promise, there was previously no provably efficient way to train models that rigorously account for symmetry—until now.

“These symmetries are important because they are some sort of information that nature is telling us about the data, and we should take it into account in our machine-learning models. We’ve now shown that it is possible to do machine-learning with symmetric data in an efficient way,” said Behrooz Tahmasebi, MIT graduate student and co-lead author of the new study, in a media statement.

The research, recently presented at the International Conference on Machine Learning, is co-authored by fellow MIT graduate student Ashkan Soleymani (co-lead author), Stefanie Jegelka (associate professor of EECS, IDSS member, and CSAIL member), and Patrick Jaillet (Dugald C. Jackson Professor of Electrical Engineering and Computer Science and principal investigator at LIDS).

Rethinking how AI sees the world

Symmetric data appears across numerous scientific disciplines. For instance, a model capable of recognizing an object irrespective of its position in an image demonstrates such symmetry. Without built-in mechanisms to process these patterns, machine learning models can make more mistakes and require massive datasets for training. Conversely, models that leverage symmetry can work faster and with fewer data points.

“Graph neural networks are fast and efficient, and they take care of symmetry quite well, but nobody really knows what these models are learning or why they work. Understanding GNNs is a main motivation of our work, so we started with a theoretical evaluation of what happens when data are symmetric,” Tahmasebi noted.

The MIT researchers explored the trade-off between how much data a model needs and the computational effort required. Their resulting algorithm brings symmetry to the fore, allowing models to learn from fewer examples without spending excessive computing resources.

Blending algebra and geometry

The team combined strategies from both algebra and geometry, reformulating the problem so the machine learning model could efficiently process the inherent symmetries in the data. This innovative blend results in an optimization problem that is computationally tractable and requires fewer training samples.

“Most of the theory and applications were focusing on either algebra or geometry. Here we just combined them,” explained Tahmasebi.

By demonstrating that symmetry-aware training can be both accurate and efficient, the breakthrough paves the way for the next generation of neural network architectures, which promise to be more precise and less resource-intensive than conventional models.

“Once we know that better, we can design more interpretable, more robust, and more efficient neural network architectures,” added Soleymani.

This foundational advance is expected to influence future research in diverse applications, including materials science, astronomy, and climate modeling, wherever symmetry in data is a key feature.
