Space & Physics

Why does superconductivity matter?

Superconductivity was discovered on April 8, 1911, by H. Kamerlingh Onnes, who was studying the resistance of solid mercury (Hg) at cryogenic temperatures. Helium had been liquefied only a few years earlier, which made such temperatures accessible. At T = 4.2 K, the resistance of Hg disappeared abruptly. This marked a transition to a phase that had never been seen before: a new state of matter that is resistanceless and strongly diamagnetic. Kamerlingh Onnes sent two reports to KNAW (the Royal Netherlands Academy of Arts and Sciences), in which he called the zero-resistance state ‘superconductivity’.

Another discovery went unnoticed in the same experiment: the transition of helium (He) at 2.2 K, the so-called λ transition, below which He becomes a superfluid. However, we shall skip that discussion for now. A couple of years later, superconductivity was found in lead (Pb) at 7 K. Much later, in 1941, niobium nitride was found to superconduct below 16 K. The burning question in those days was: what would the conductivity or resistivity of metals be at very low temperatures?

The reason behind such a question is Lord Kelvin’s suggestion that, for metals, the resistivity initially decreases with falling temperature and finally climbs to infinity at zero kelvin, because the electrons’ mobility becomes zero at 0 K, yielding zero conductivity and hence infinite resistivity. Kamerlingh Onnes and his assistant Jacob Clay studied the resistance of gold (Au) and platinum (Pt) down to T = 14 K. The resistance decreased linearly down to 14 K; however, lower temperatures could not be accessed because liquid He was not yet available. Helium was eventually liquefied in 1908.

In fact, the experiment with Au and Pt was repeated after 1908. For Pt, the resistivity became constant below 4.2 K, while Au was found to superconduct at very low temperatures. Thus, Lord Kelvin’s notion of infinite resistivity at very low temperatures was incorrect. For mercury, Onnes found that at 3 K (below the transition), the normalised resistance is about 10⁻⁷. Above 4.2 K, the resistance reappears. The transition is very sharp: the resistance falls abruptly to zero within a temperature window of 10⁻⁴ K.


Perfect conductors, superconductors, and magnets

All superconductors are normal metals above the transition temperature. If we ask where in the periodic table most of the superconductors are located, the answer throws up some surprises. The good metals are rarely superconducting. Examples are Ag, Au, Cu, Cs, etc., which have transition temperatures of the order of ∼0.1 K, while the bad metals, such as niobium alloys, copper oxides, and MgB2, have relatively larger transition temperatures. Thus, bad metals are, in general, good superconductors. An important quantity in this regard is the mean free path of the electrons (above Tc). For good metals (the bad superconductors), it is usually a few hundred Å, whereas for bad metals (the good superconductors), it is only a few Å, as the electrons are strongly coupled to phonons. The orbital overlap is large in a superconductor. In good metals, the orbital overlap is small, and often they become good magnets.

In the periodic table, elements such as Al, Bi, Cd, Ga, etc., become good superconductors, while 3d transition elements such as Cr, Mn, and Fe are bad superconductors and in fact form good magnets. For all of them, whether they are superconductors or magnets, there is a large density of states at the Fermi level. So, a lot of electronic states are necessary for the electrons in these systems to be able to condense into a superconducting state (or even a magnetic state). The nature of the electronic wavefunction determines whether they develop superconducting order or magnetic order. For example, electronic wavefunctions have a large spatial extent for superconductors, while they are short-range for magnets.

Meissner effect

The near-complete expulsion of the magnetic field from a superconducting specimen is called the Meissner effect. In the presence of a magnetic field, current loops are generated at the periphery so as to block the entry of the external field into the specimen. If a magnetic field were present within a superconductor, then, by Ampere’s law, there would be a normal current within the sample. However, there is no normal current inside the specimen, so there cannot be any magnetic field. For this reason, superconductors are known as perfect diamagnets with very large diamagnetic susceptibility. Even the best-known diamagnets (which are non-superconductors) have magnetic susceptibilities of the order of 10⁻⁵. Thus, diamagnetism can be considered a defining property of superconductors, distinct from zero electrical resistance.
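The gap between a superconductor and an ordinary diamagnet can be made explicit with a short estimate. This is only a sketch, assuming SI units, a linear response M = χH, and complete flux expulsion (B = 0 inside the specimen, ignoring the thin surface layer and any sample-shape effects):

```latex
\begin{aligned}
\mathbf{B} &= \mu_0\,(\mathbf{H} + \mathbf{M}) = 0 \quad \text{(inside the specimen)} \\
\Rightarrow\ \mathbf{M} &= -\mathbf{H} \\
\Rightarrow\ \chi &= \frac{M}{H} = -1
\end{aligned}
```

Set against |χ| ∼ 10⁻⁵ for ordinary diamagnets, the superconducting response is larger in magnitude by about five orders.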


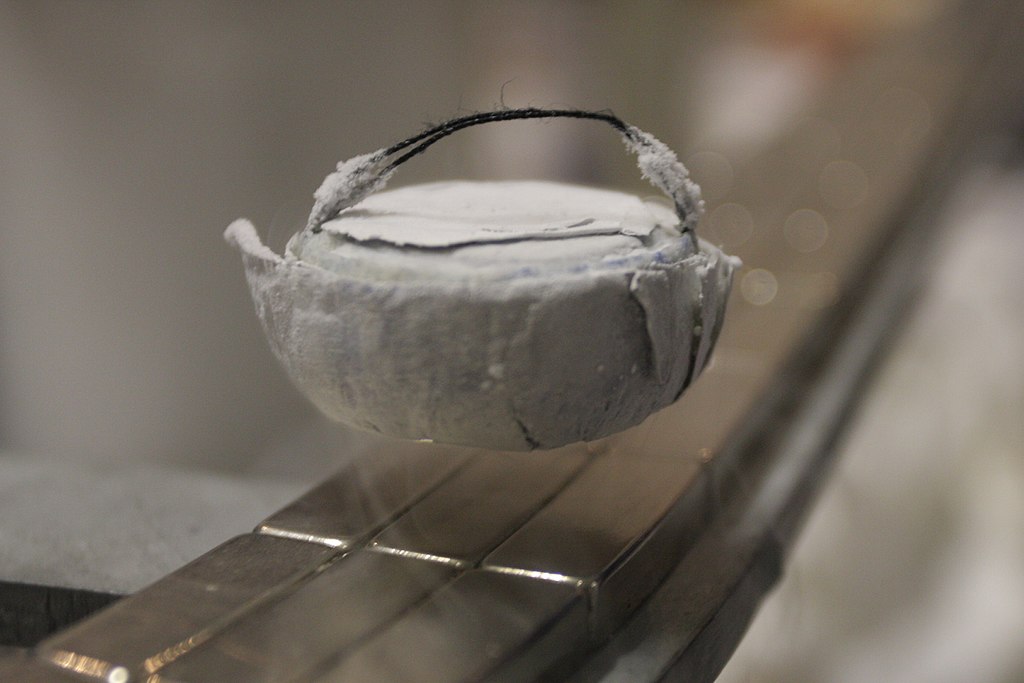

A typical experiment demonstrating the Meissner effect can be thought of as follows: take a superconducting sample (T < Tc), sprinkle iron filings around the sample, and switch on the magnetic field. The iron filings line up in concentric circles around the specimen. This indicates that the flux lines have been expelled from the sample and pass around it, which makes the filings line up.

Distinction between perfect conductors and superconductors

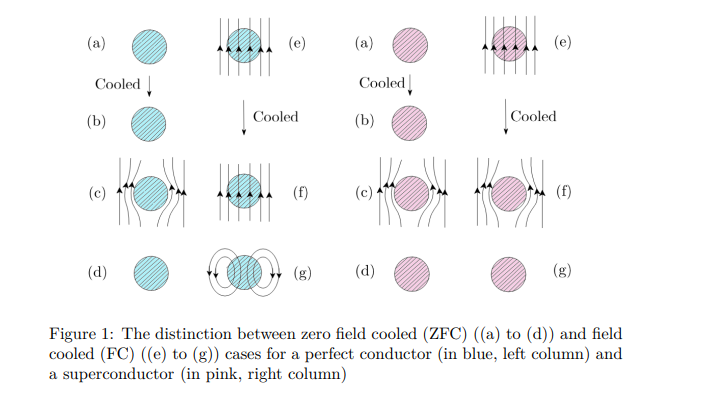

The distinction between a perfect conductor and a superconductor is brought out by comparing the field-cooled (FC) and zero-field-cooled (ZFC) cases, as shown in Fig. 1.

In the zero-field-cooled case, the temperature is lowered from T > Tc to T < Tc in the absence of an external magnetic field, with both the perfect conductor and the superconductor starting in their metallic states (see left panel for both in Fig. 1). A magnetic field is then applied, and it is kept out of the sample in both cases. Finally, the field is withdrawn, leaving no flux inside either sample. However, if the cooling is done in the presence of an external field, then after the field is withdrawn the flux lines remain trapped in a perfect conductor, whereas the superconductor expels the field owing to the Meissner effect and is left with no memory of the applied field, just as in the zero-field-cooled case. So, superconductors have no memory, while perfect conductors have memory.
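The two cooling protocols can be traced step by step with a toy model. The sketch below is illustrative only: the function name `interior_field`, the protocol lists, and the field values are hypothetical, and it assumes an idealised sample (no penetration depth, no shape effects, applied field always below the critical field). A perfect conductor is modelled as freezing its interior field below Tc, while a superconductor is modelled as always expelling it.

```python
TC = 4.2  # transition temperature in kelvin (the mercury value quoted in the text)


def interior_field(kind, protocol):
    """Return the interior field after each (temperature, applied_field) step.

    kind is "perfect_conductor" or "superconductor". This is a toy model,
    not a solution of the London or Maxwell equations.
    """
    b_inside = 0.0
    history = []
    for temperature, b_applied in protocol:
        if temperature >= TC:
            # Normal metal: the applied field penetrates freely.
            b_inside = b_applied
        elif kind == "superconductor":
            # Meissner effect: flux is expelled whatever the history.
            b_inside = 0.0
        # Perfect conductor below Tc: interior flux cannot change,
        # so b_inside keeps the value it had on crossing Tc.
        history.append(b_inside)
    return history


# Each step is (temperature in K, applied field in arbitrary units).
ZFC = [(10.0, 0.0), (2.0, 0.0), (2.0, 1.0), (2.0, 0.0)]  # cool, apply field, remove field
FC = [(10.0, 1.0), (2.0, 1.0), (2.0, 0.0)]               # cool in field, remove field

for kind in ("perfect_conductor", "superconductor"):
    print(kind, "ZFC:", interior_field(kind, ZFC), "FC:", interior_field(kind, FC))
```

Under these assumptions, only the field-cooled perfect conductor ends with a non-zero interior field, which is the "memory" described above.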

Microscopic considerations: BCS theory

The first microscopic theory of superconductivity was proposed by Bardeen, Cooper, and Schrieffer (BCS) in 1957, which earned them a Nobel Prize in 1972. The underlying assumption was that an attractive interaction between the electrons is possible, mediated by phonons. Thus, electrons form bound pairs under certain conditions: (i) the two electrons lie in the vicinity of the filled Fermi sea, within an energy range ħωD (set by the phonons, or lattice); (ii) phonons, that is, the underlying lattice, are present. The latter is confirmed by the isotope effect experiment, which shows that the transition temperature varies as the inverse square root of the ionic mass. Since the Debye frequency depends on the ionic mass, it implies that the lattice must be involved. A small calculation yields that an attractive interaction is possible in a narrow range of energy. This attractive interaction causes the system to be unstable, and a long-range order develops via symmetry breaking.

In a book by one of the discoverers, namely Schrieffer, he described an analogy with a dance floor full of couples, each couple dancing together and being completely oblivious to any other couple present in the room. The couples, while dancing, drift from one end of the room to another but do not collide with each other. This mirrors the absence of dissipation in transport through a superconductor.

The BCS theory explained most of the features of the superconductors known at that time, such as (i) the discontinuity of the specific heat at the transition temperature, Tc; (ii) the involvement of the lattice via the isotope effect; (iii) the estimation of Tc and the energy gap. The values of Tc and the gap are confirmed by tunnelling experiments across metal-superconductor (M-S) or metal-insulator-superconductor (MIS) types of junctions, and Giaever was awarded the Nobel Prize in 1973 for his work on these experiments; (iv) the Meissner effect, which can be explained within a linear response regime; (v) the temperature dependence of the energy gap, which vanishes gradually as Tc is approached, indicating a second-order phase transition. Most of the features of conventional superconductors can be explained using BCS theory. Another salient feature of the theory is that it is non-perturbative. There is no small parameter in the problem. The calculations were done with a variational theory where the energy is minimised with respect to some free parameters of the variational wavefunction, known as the BCS wavefunction.
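The estimates of Tc and the gap mentioned above can be illustrated with the standard weak-coupling BCS results, k_B Tc ≈ 1.13 ħωD exp(−1/N(0)V) and 2Δ(0) ≈ 3.53 k_B Tc, together with the isotope scaling Tc ∝ M^(−1/2). The sketch below is not taken from the article: the function names are hypothetical, the Debye energy is written as k_B times a Debye temperature, and the input numbers (a Debye temperature of 100 K and a dimensionless coupling N(0)V of 0.3) are purely illustrative rather than values for any real material.

```python
import math

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K


def bcs_tc(debye_temperature_k, coupling):
    """Weak-coupling BCS estimate of Tc in kelvin.

    Uses k_B*Tc = 1.13 * hbar*omega_D * exp(-1 / (N(0)V)), with the Debye
    energy hbar*omega_D written as k_B * debye_temperature_k.
    """
    return 1.13 * debye_temperature_k * math.exp(-1.0 / coupling)


def bcs_gap_ev(tc_k):
    """Zero-temperature gap in eV from 2*Delta(0) = 3.53 * k_B * Tc."""
    return 0.5 * 3.53 * K_B_EV * tc_k


def isotope_shifted_tc(tc_k, mass_old, mass_new):
    """Rescale Tc for a change of ionic mass, assuming Tc ~ M**(-1/2)."""
    return tc_k * math.sqrt(mass_old / mass_new)


# Illustrative inputs only (assumptions, not measured material parameters).
tc = bcs_tc(debye_temperature_k=100.0, coupling=0.3)
print(f"Tc estimate: {tc:.2f} K")
print(f"Gap estimate: {bcs_gap_ev(tc) * 1e3:.3f} meV")
print(f"Tc with 2% heavier ions: {isotope_shifted_tc(tc, 1.00, 1.02):.2f} K")
```

The exponential dependence on the coupling is why no expansion in small N(0)V reproduces the result, in line with the non-perturbative character of the theory noted above.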

Unconventional Superconductors: High-Tc Cuprates

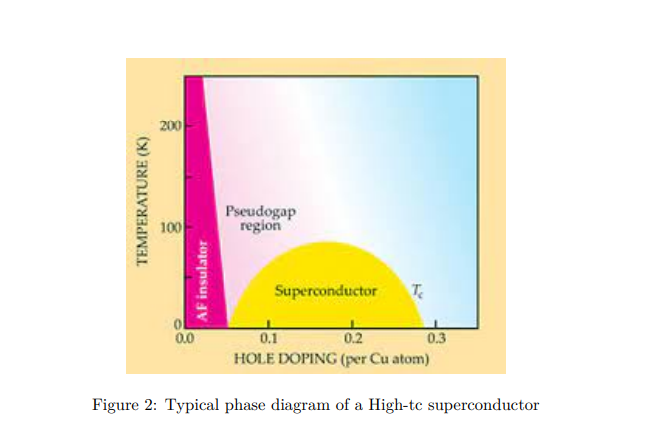

This is a class of superconductors in which two-dimensional copper oxide planes play the main role, and superconductivity occurs in these planes. Doping these planes with mobile carriers makes the system unstable towards superconducting correlations. At zero doping, the system is an antiferromagnetic insulator (see Fig. 2). With about 15% to 20% doping with foreign elements, such as strontium (Sr) (for example, in La2−xSrxCuO4), the system turns superconducting. Two things are surprising in this regard: (i) the proximity of the insulating state to the superconducting state; (ii) for a system initially in the superconducting state, as the temperature is raised, instead of going into a metallic state, it shows several unfamiliar features that are very unlike the known Fermi liquid characteristics. This state is called a strange metal.

In fact, there are some signatures of pre-formed pairs in the so-called metallic state, known as the pseudogap phase. Since the starting point from which one should build a theory is missing, a complete understanding of the mechanism leading to the phenomenon has not yet been achieved. It remains a theoretical riddle.


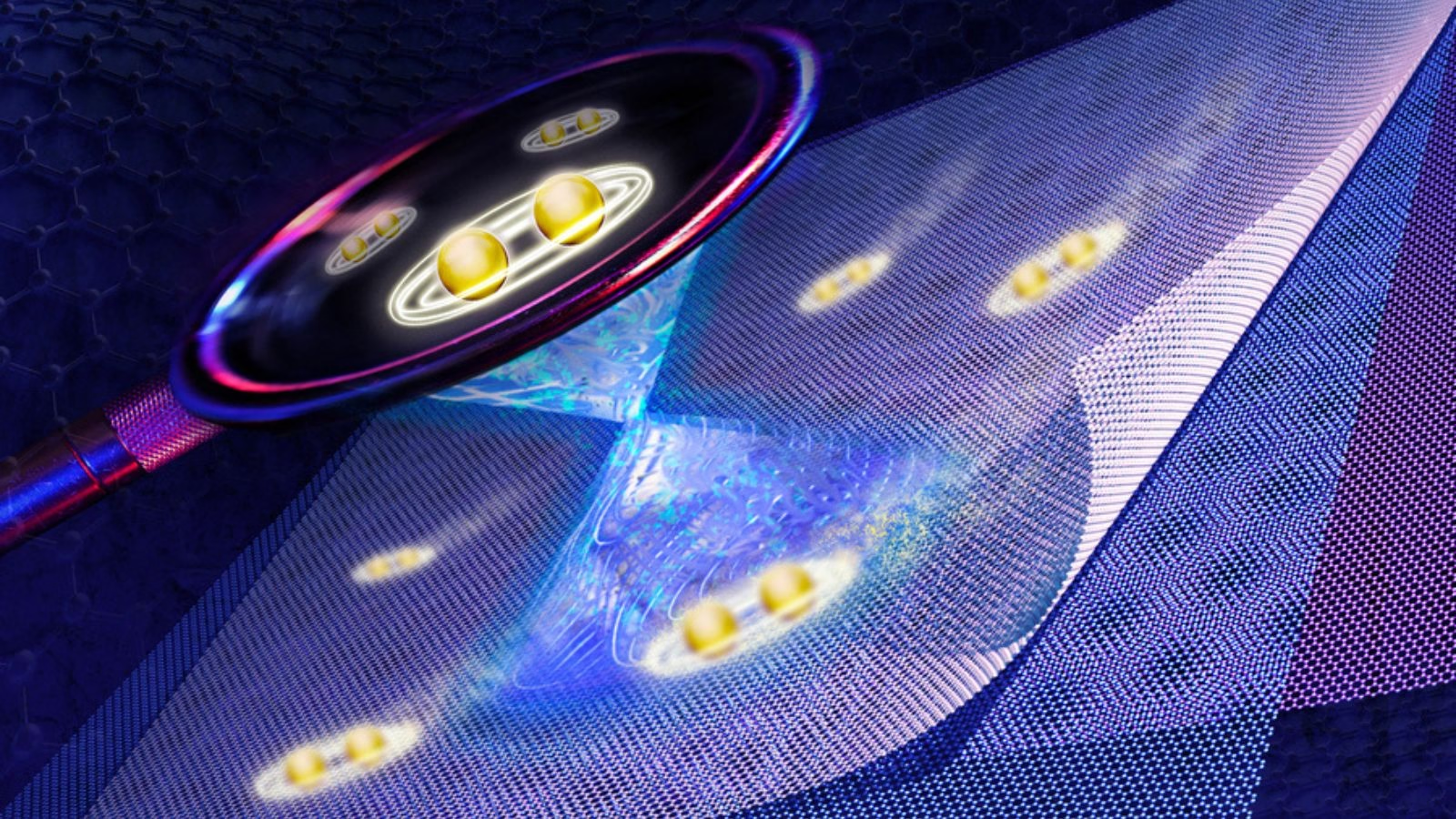

MIT Pioneers Real-Time Observation of Unconventional Superconductivity in Magic-Angle Graphene

Physicists have directly observed unconventional superconductivity in magic-angle twisted tri-layer graphene using a new experimental platform, revealing a unique pairing mechanism

MIT physicists have unveiled compelling direct evidence for unconventional superconductivity in “magic-angle” twisted tri-layer graphene—an atomically engineered material that could reimagine the future of energy transport and quantum technologies. Their new experiment marks a pivotal step forward, offering a fresh perspective on how electrons synchronize in precisely stacked two-dimensional materials, potentially laying the groundwork for next-generation superconductors that function well above current temperature limits.

Instead of looking merely at theoretical possibilities, the MIT team built a novel platform that lets researchers visualize the superconducting gap “as it emerges in real-time within 2D materials,” said co-lead author Shuwen Sun in a media statement. This gap is crucial, reflecting how robust the material’s superconducting state is during temperature changes—a key indicator for practical applications.

What’s striking, said Jeong Min Park, study co-lead author, is that the superconducting gap in magic-angle graphene differs starkly from the smooth, uniform profile seen in conventional superconductors. “We observed a V-shaped gap that reveals an entirely new pairing mechanism—possibly driven by the electrons themselves, rather than crystal vibrations,” Park said. Such direct measurement is a “first” for the field, giving scientists a more refined tool for identifying and understanding unconventional superconductivity.

Senior author Pablo Jarillo-Herrero emphasized that their method could help crack the code behind room-temperature superconductors: “This breakthrough may trigger deeper insights not just for graphene, but for the entire class of twistronic materials. Imagine grids and quantum computers that operate with zero energy loss—this is the holy grail we’re moving toward,” Jarillo-Herrero said in the MIT release.

Collaborators included scientists from Japan’s National Institute for Materials Science, broadening the impact of the research. The discovery builds on years of progress since the first magic-angle graphene experiments in 2018, opening what many now call the “twistronics” era—a field driven by stacking and twisting atom-thin materials to unlock uniquely quantum properties.

Looking ahead, the team plans to expand its analysis to other ultra-thin structures, hoping to map out electronic behavior not only for superconductors, but for a wider range of correlated quantum phases. “We can now directly observe electron pairs compete and coexist with other quantum states—this could allow us to design new materials from the ground up,” said Park in her public statement.

The research underscores the value of visualization in fundamental physics, suggesting that direct observation may be the missing link to controlling quantum phenomena for efficient, room-temperature technology.


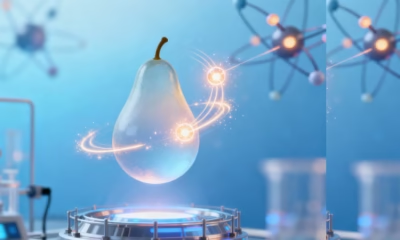

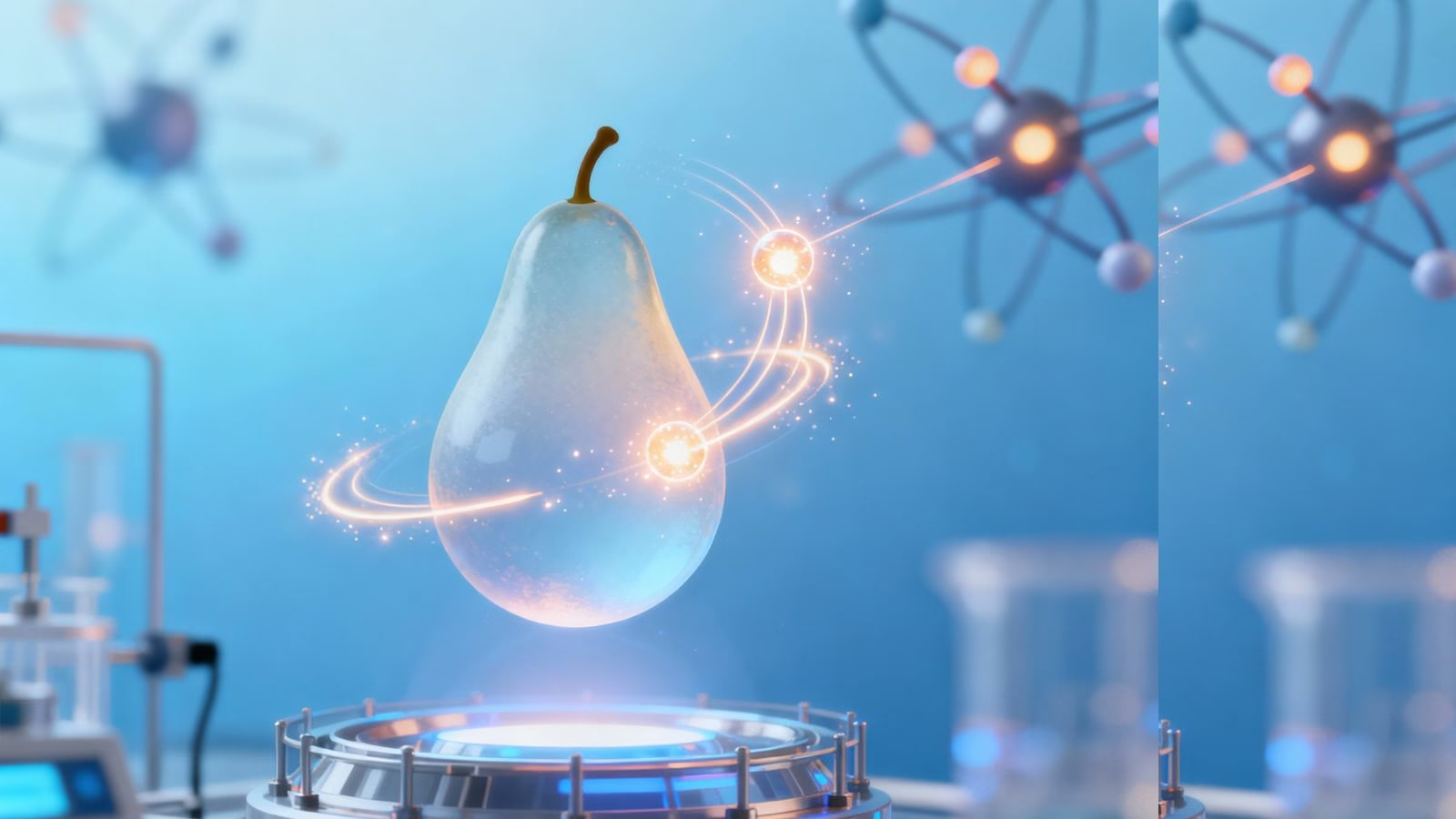

Atoms Speak Out: Physicists Use Electrons as Messengers to Unlock Secrets of the Nucleus

Physicists at MIT have devised a table-top method to peer inside an atom’s nucleus using the atom’s own electrons

Physicists at MIT have developed a pioneering method to look inside an atom’s nucleus — using the atom’s own electrons as tiny messengers within molecules rather than massive particle accelerators.

In a study published in Science, the researchers demonstrated this approach using molecules of radium monofluoride, which pair a radioactive radium atom with a fluoride atom. The molecules act like miniature laboratories where electrons naturally experience extremely strong electric fields. Under these conditions, some electrons briefly penetrate the radium nucleus, interacting directly with protons and neutrons inside. This rare intrusion leaves behind a measurable energy shift, allowing scientists to infer details about the nucleus’ internal structure.

The team observed that these energy shifts, though minute — about one millionth of the energy of a laser photon — provide unambiguous evidence of interactions occurring inside the nucleus rather than outside it. “We now have proof that we can sample inside the nucleus,” said Ronald Fernando Garcia Ruiz, the Thomas A. Franck Associate Professor of Physics at MIT, in a statement. “It’s like being able to measure a battery’s electric field. People can measure its field outside, but to measure inside the battery is far more challenging. And that’s what we can do now.”

Traditionally, exploring nuclear interiors required kilometer-long particle accelerators to smash high-speed beams of electrons into targets. The MIT technique, by contrast, achieves similar insight with a table-top molecular setup. It makes use of the molecule’s natural electric environment to magnify these subtle interactions.

The radium nucleus, unlike most which are spherical, has an asymmetric “pear” shape that makes it a powerful system for studying violations of fundamental physical symmetries — phenomena that could help explain why the universe contains far more matter than antimatter. “The radium nucleus is predicted to be an amplifier of this symmetry breaking, because its nucleus is asymmetric in charge and mass, which is quite unusual,” Garcia Ruiz explained.

To conduct their experiments, the researchers produced radium monofluoride molecules at CERN’s Collinear Resonance Ionization Spectroscopy (CRIS) facility, trapped and cooled them in laser-guided chambers, and then measured laser-induced energy transitions with extreme precision. The work involved MIT physicists Shane Wilkins, Silviu-Marian Udrescu, and Alex Brinson, alongside international collaborators.

“Radium is naturally radioactive, with a short lifetime, and we can currently only produce radium monofluoride molecules in tiny quantities,” said Wilkins. “We therefore need incredibly sensitive techniques to be able to measure them.”

As Udrescu added, “When you put this radioactive atom inside of a molecule, the internal electric field that its electrons experience is orders of magnitude larger compared to the fields we can produce and apply in a lab. In a way, the molecule acts like a giant particle collider and gives us a better chance to probe the radium’s nucleus.”

Going forward, the MIT team aims to cool and align these molecules so that the orientation of their pear-shaped nuclei can be controlled for even more detailed mapping. “Radium-containing molecules are predicted to be exceptionally sensitive systems in which to search for violations of the fundamental symmetries of nature,” Garcia Ruiz said. “We now have a way to carry out that search.”


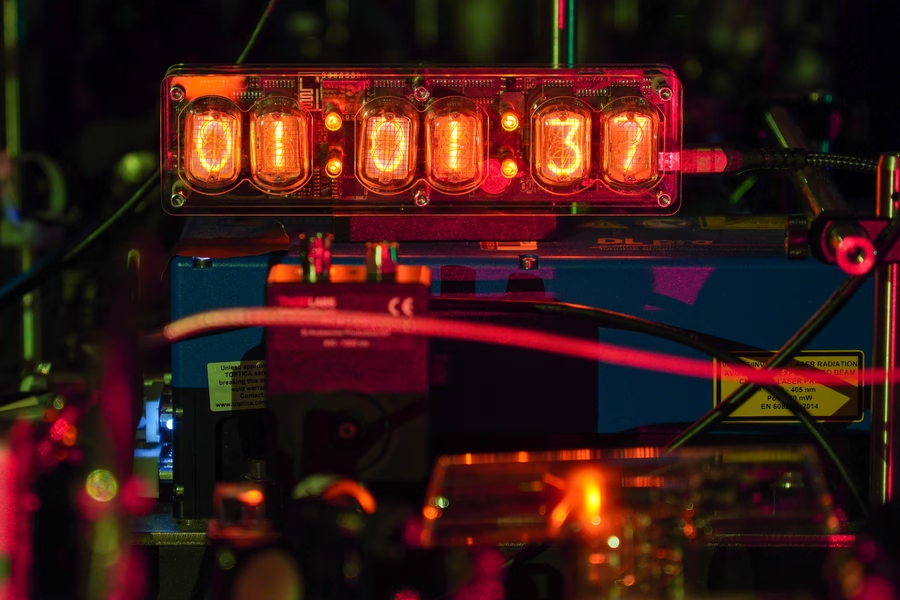

Physicists Double Precision of Optical Atomic Clocks with New Laser Technique

MIT researchers develop a quantum-enhanced method that doubles the precision and stability of optical atomic clocks, paving the way for portable, ultra-accurate timekeeping.

MIT physicists have unveiled a new technique that could significantly improve the precision and stability of next-generation optical atomic clocks, devices that underpin everything from mobile transactions to navigation apps. In a recent media statement, the MIT team explained: “Every time you check the time on your phone, make an online transaction, or use a navigation app, you are depending on the precision of atomic clocks. An atomic clock keeps time by relying on the ‘ticks’ of atoms as they naturally oscillate at rock-steady frequencies.”

Current atomic clocks rely on cesium atoms tracked with lasers at microwave frequencies, but scientists are advancing to clocks based on faster-ticking atoms like ytterbium, which can be tracked with lasers at higher, optical frequencies and discern intervals up to 100 trillion times per second.

A research group at MIT, led by Vladan Vuletić, the Lester Wolfe Professor of Physics, detailed that their newly developed method harnesses a laser-induced “global phase” in ytterbium atoms and boosts this effect using quantum amplification. Vuletić stated, “We think our method can help make these clocks transportable and deployable to where they’re needed.” The approach, called global phase spectroscopy, doubles the precision of an optical atomic clock, enabling it to resolve twice as many ticks per second compared to standard setups, and promises further gains with increasing atom counts.

The technique could pave the way for portable optical atomic clocks able to measure all manner of phenomena in various locations. Vuletić summarized the broader scientific ambitions: “With these clocks, people are trying to detect dark matter and dark energy, and test whether there really are just four fundamental forces, and even to see if these clocks can predict earthquakes.”

The MIT team has previously demonstrated improved clock precision by quantum-entangling hundreds of ytterbium atoms and using time-reversal tricks to amplify their signals. Their latest advance applies these methods to much faster optical frequencies, where stabilizing the clock laser has always been a major challenge. “When you have atoms that tick 100 trillion times per second, that’s 10,000 times faster than the frequency of microwaves,” said Vuletić in the statement. Their experiments revealed surprisingly useful “global phase” information about the laser frequency, previously thought irrelevant, unlocking the potential for even greater accuracy.

The research, led by Vuletić and joined by Leon Zaporski, Qi Liu, Gustavo Velez, Matthew Radzihovsky, Zeyang Li, Simone Colombo, and Edwin Pedrozo-Peñafiel of the MIT-Harvard Center for Ultracold Atoms, was published in Nature. They believe the technical benefits of the new method will make atomic clocks easier to run and enable stable, transportable clocks fit for future scientific exploration, including earthquake prediction, fundamental physics, and global time standards.
