Technology

Researchers Crack Open the ‘Black Box’ of Protein AI Models

The approach could accelerate drug target identification, vaccine research, and new biological discoveries.

For years, artificial intelligence models that predict protein structures and functions have been critical tools in drug discovery, vaccine development, and therapeutic antibody design. But while these protein language models (PLMs), often built on large language models (LLMs), deliver impressively accurate predictions, researchers have been unable to see how the models arrive at those decisions — until now.

In a study published this week in the Proceedings of the National Academy of Sciences (PNAS), a team of MIT researchers unveiled a novel method to interpret the inner workings of these black-box models. By shedding light on the features that influence predictions, the approach could accelerate drug target identification, vaccine research, and new biological discoveries.

Cracking the protein ‘black box’

“Protein language models have been widely used for many biological applications, but there’s always been a missing piece: explainability,” said Bonnie Berger, Simons Professor of Mathematics and head of the Computation and Biology group in MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL). In a media statement, she explained, “Our work has broad implications for enhanced explainability in downstream tasks that rely on these representations. Additionally, identifying features that protein language models track has the potential to reveal novel biological insights.”

The study was led by MIT graduate student Onkar Gujral, with contributions from Mihir Bafna, also a graduate student, and Eric Alm, professor of biological engineering at MIT.

From AlphaFold to explainability

Protein modelling took off in 2018 when Berger and then-graduate student Tristan Bepler introduced the first protein language model. Much as ChatGPT processes words, these models analyze amino acid sequences to predict protein structure and function. That work paved the way for powerful systems like AlphaFold, ESM2, and OmegaFold, transforming the fields of bioinformatics and molecular biology.

Yet, despite their predictive power, researchers remained in the dark about why a model reached certain conclusions. “We would get out some prediction at the end, but we had absolutely no idea what was happening in the individual components of this black box,” Berger noted.

The sparse autoencoder approach

To address this challenge, the MIT team employed a technique called a sparse autoencoder — an algorithm originally used to interpret LLMs. Sparse autoencoders expand the representation of a protein across thousands of neural nodes, making it easier to distinguish which specific features influence the prediction.

“In a sparse representation, the neurons lighting up are doing so in a more meaningful manner,” explained Gujral in a media statement. “Before the sparse representations are created, the networks pack information so tightly together that it’s hard to interpret the neurons.”
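The idea Gujral describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the MIT team's implementation: the layer sizes are hypothetical, the weights are random rather than trained, and sparsity is enforced with a simple top-k rule (other sparse autoencoders use an L1 penalty instead). It shows a small dense embedding being expanded into a much wider layer in which only a handful of nodes stay active.

```python
import random

def make_matrix(rows, cols, rng, scale=0.1):
    """Random weights standing in for trained parameters (assumption)."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

def encode(x, w_enc, b_enc, k):
    """Expand a dense embedding into a wider layer, then keep the k largest activations."""
    pre = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)  # ReLU
           for row, b in zip(w_enc, b_enc)]
    keep = set(sorted(range(len(pre)), key=lambda i: pre[i], reverse=True)[:k])
    return [a if i in keep else 0.0 for i, a in enumerate(pre)]

def decode(z, w_dec):
    """Map the sparse code back to the original embedding size."""
    dim = len(w_dec[0])
    return [sum(z[j] * w_dec[j][d] for j in range(len(z))) for d in range(dim)]

rng = random.Random(0)
DENSE_DIM, SPARSE_DIM, K = 8, 64, 4  # hypothetical sizes, far smaller than a real model

w_enc = make_matrix(SPARSE_DIM, DENSE_DIM, rng)
b_enc = [0.0] * SPARSE_DIM
w_dec = make_matrix(SPARSE_DIM, DENSE_DIM, rng)

embedding = [rng.gauss(0.0, 1.0) for _ in range(DENSE_DIM)]  # stand-in for a protein embedding
sparse_code = encode(embedding, w_enc, b_enc, K)
reconstruction = decode(sparse_code, w_dec)

# The few nodes that "light up" are the ones a researcher would try to label.
active_nodes = [i for i, a in enumerate(sparse_code) if a > 0.0]
print("active nodes:", active_nodes)
```

In a trained model, the weights would be fitted so that the reconstruction matches the original embedding, and each active node could then be inspected across many proteins to see which biological feature it tracks.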

By analyzing these expanded representations with help from the AI assistant Claude, the researchers could link specific nodes to biological features such as protein family, molecular function, or location within a cell. For instance, one node could be identified as responding to proteins involved in transmembrane ion transport.

Implications for drug discovery and biology

This new transparency could be transformational for drug design and vaccine development, allowing scientists to select the most reliable models for specific biomedical tasks. Moreover, the study suggests that as AI models become more powerful, they could reveal previously undiscovered biological patterns.

“Understanding what features protein models encode means researchers can fine-tune inputs, select optimal models, and potentially even uncover new biological insights from the models themselves,” Gujral said. “At some point, when these models get more powerful, you could learn more biology than you already know just from opening up the models.”

Technology

From Tehran Rooftops To Orbit: How Elon Musk Is Reshaping Who Controls The Internet

How Starlink turned the sky into a battleground for digital power — and why one private network now challenges the sovereignty of states

On a rooftop in northern Tehran, long after midnight, a young engineering student adjusts a flat white dish toward the sky. The city around him is digitally dark—mobile data throttled, social media blocked, foreign websites unreachable. Yet inside his apartment, a laptop screen glows with Telegram messages, BBC livestreams, and uncensored access to the outside world.

Scenes like this have appeared repeatedly in footage from Iran’s unrest broadcast by international news channels.

But there’s a catch. The connection does not travel through Iranian cables or telecom towers. It comes from space.

Hundreds of kilometres above him, a small cluster of satellites belonging to Elon Musk’s Starlink network relays his data through the vacuum of orbit, bypassing the state entirely.

For governments built on control of information, this is no longer a technical inconvenience. It is a political nightmare. The image is quietly extraordinary. Not because of the technology — that story is already familiar — but because of what it represents: a private satellite network, owned by a US billionaire, now functioning as a parallel communications system inside a sovereign state that has deliberately tried to shut its citizens offline.

The Rise of an Unstoppable Network

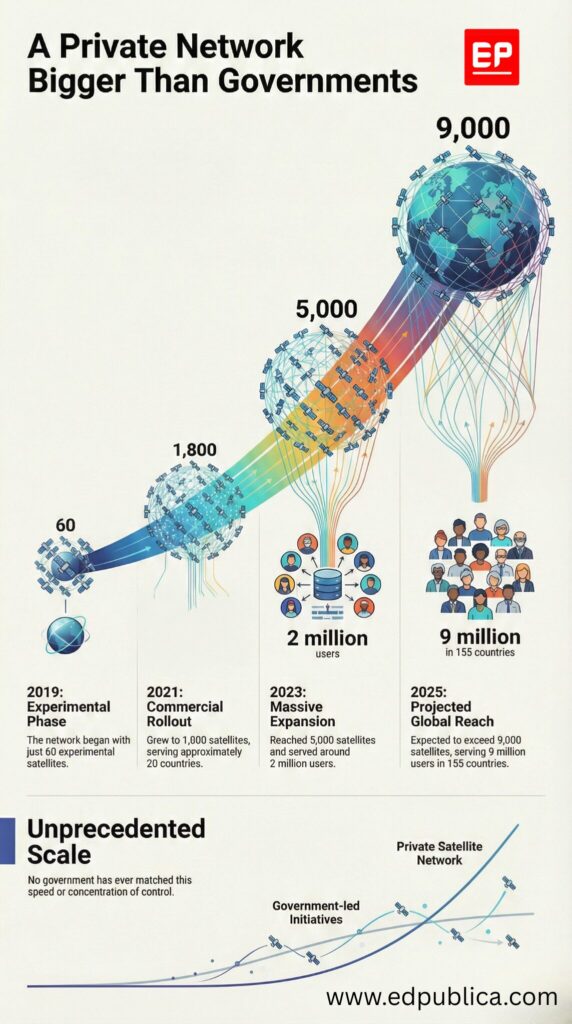

Starlink, operated by Musk’s aerospace company SpaceX, has quietly become the most ambitious communications infrastructure ever built by a private individual.

As of late 2025, more than 9,000 Starlink satellites are in low Earth orbit (LEO) (SpaceX / industry trackers, 2025). According to a report in Business Insider, the network serves over 9 million active users globally, and Starlink now operates in more than 155 countries and territories (Starlink coverage data, 2025).

It is the largest satellite constellation in human history, dwarfing every government system combined.

This is not merely a technology story. It is a power story.

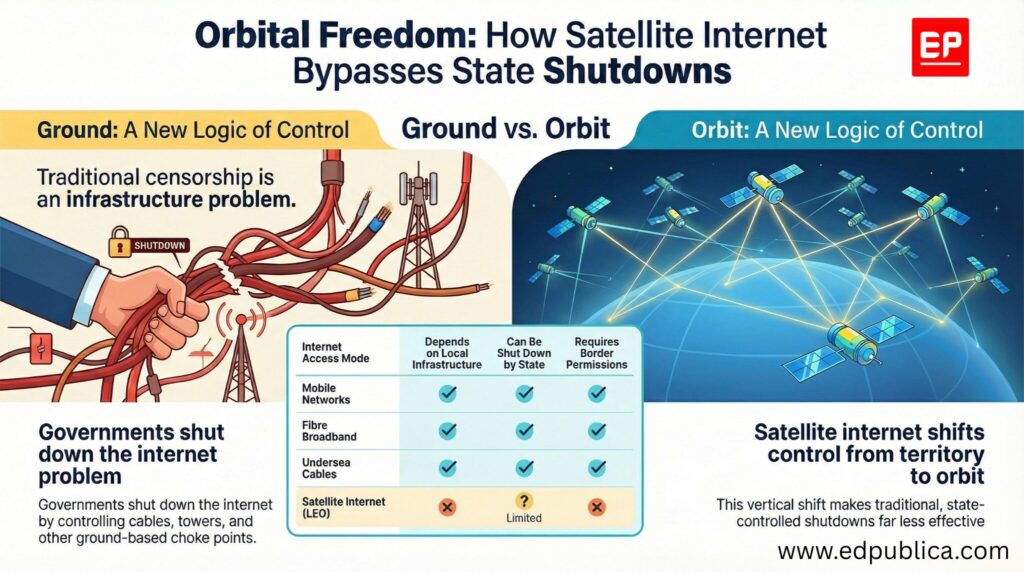

Unlike traditional internet infrastructure — fibre cables, mobile towers, undersea routes — Starlink’s backbone exists in space. It does not cross borders. It does not require landing rights in the conventional sense. And, increasingly, it does not ask permission.

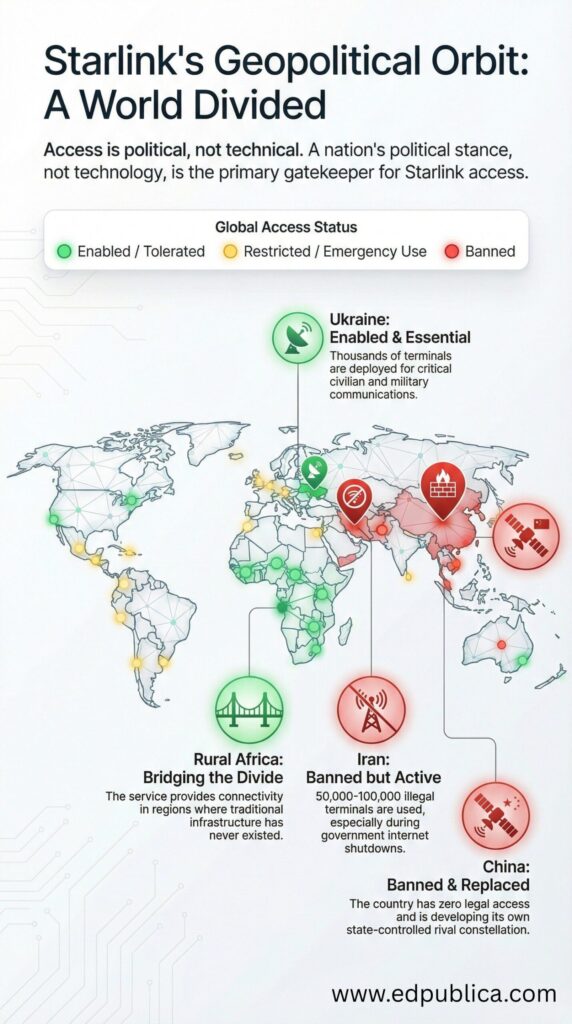

Iran: When the Sky Replaced the State

During successive waves of anti-government protests in Iran, authorities imposed sweeping internet shutdowns: mobile networks crippled, platforms blocked, bandwidth throttled to near zero. These tactics, used repeatedly since 2019, were designed to isolate protesters from each other and from the outside world.

They did not fully anticipate space-based internet.

By late 2024 and 2025, Starlink terminals had begun appearing clandestinely across Iranian cities, smuggled through borders or carried in by diaspora networks. Possession is illegal. Penalties are severe. Yet the demand has grown.

Because the network operates without local infrastructure, users can communicate with foreign media, upload protest footage in real time, coordinate securely beyond state surveillance, and maintain access even during nationwide blackouts.

The numbers are necessarily imprecise, but multiple independent estimates provide a sense of scale. Analysts at BNE IntelliNews estimated over 30,000 active Starlink users inside Iran by 2025.

Iranian activist networks suggest the number of physical terminals may be between 50,000 and 100,000, many shared across neighbourhoods. Earlier acknowledgements from Elon Musk confirmed that SpaceX had activated service coverage over Iran despite the lack of formal licensing.

This is what alarms governments most: the state no longer controls the kill switch.

Ukraine: When One Man Could Switch It Off

The power — and danger — of this new infrastructure became even clearer in Ukraine.

After Russia’s 2022 invasion, Starlink terminals were shipped in by the thousands to keep Ukrainian communications alive. Hospitals, emergency services, journalists, and frontline military units all relied on it. For a time, Starlink was celebrated as a technological shield for democracy.

Then came the uncomfortable reality.

Investigative reporting later revealed that Elon Musk personally intervened in decisions about where Starlink would and would not operate. In at least one documented case, coverage was restricted near Crimea, reportedly to prevent Ukrainian drone operations against Russian naval assets.

The implications were stark: A private individual, accountable to no electorate, had the power to influence the operational battlefield of a sovereign war. Governments noticed.

Digital Sovereignty in the Age of Orbit

For decades, states have understood sovereignty to include control of national telecom infrastructure, regulation of internet providers, the legal authority to impose shutdowns, and the power to filter, censor, and surveil.

Starlink disrupts all of it.

The satellites are in space, outside national jurisdiction. Access can be activated remotely by SpaceX, and the terminals can be smuggled like USB devices. Traffic can bypass domestic data laws entirely.

In effect, Starlink represents a parallel internet — one that states cannot fully regulate, inspect, or disable without extraordinary countermeasures such as satellite jamming or physical raids.

Authoritarian regimes view this as foreign interference. Democratic governments increasingly see it as a strategic vulnerability. Either way, the monopoly problem is the same: A single corporate network, controlled by one individual, increasingly functions as critical global infrastructure.

How the Technology Actually Works

The power of Starlink lies in its architecture. Traditional internet depends on fibre-optic cables across cities and oceans, local internet exchanges, mobile towers and ground stations, and centralised chokepoints.

Starlink bypasses most of this. Instead, it uses thousands of LEO satellites orbiting at an altitude of about 550 km; user terminals (“dishes”) that automatically track satellites overhead; inter-satellite laser links that allow data to travel from satellite to satellite in space; and a limited number of ground gateways connecting the system to the wider internet.

This design creates resilience: No single tower to shut down, no local ISP to regulate, and no fibre line to cut.
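The resilience argument can be illustrated with a toy reachability check. The sketch below is a simplification under stated assumptions: both topologies and all node names are hypothetical, and real satellite routing is far more complex. It compares a centralised path that runs through one national ISP with a small mesh in which satellites relay to one another before reaching a gateway abroad.

```python
from collections import deque

def has_path(links, start, goal, removed=frozenset()):
    """Breadth-first search over a link map, skipping any removed nodes."""
    if start in removed or goal in removed:
        return False
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in links.get(node, ()):
            if nxt not in seen and nxt not in removed:
                seen.add(nxt)
                queue.append(nxt)
    return False

# Hypothetical centralised network: every route crosses one chokepoint.
centralised = {
    "phone": ["national_isp"],
    "national_isp": ["phone", "internet_exchange"],
    "internet_exchange": ["national_isp"],
}

# Hypothetical satellite mesh: a terminal sees two satellites, which relay
# over laser links to others that can reach a gateway outside the country.
mesh = {
    "terminal": ["sat_a", "sat_b"],
    "sat_a": ["terminal", "sat_b", "sat_c"],
    "sat_b": ["terminal", "sat_a", "sat_d"],
    "sat_c": ["sat_a", "sat_d", "gateway_abroad"],
    "sat_d": ["sat_b", "sat_c", "gateway_abroad"],
    "gateway_abroad": ["sat_c", "sat_d"],
}

isp_cut = has_path(centralised, "phone", "internet_exchange", removed={"national_isp"})
mesh_survives = all(
    has_path(mesh, "terminal", "gateway_abroad", removed={sat})
    for sat in ("sat_a", "sat_b", "sat_c", "sat_d")
)
print("centralised route after ISP shutdown:", isp_cut)
print("mesh route after losing any one satellite:", mesh_survives)
```

In this toy model, removing the single ISP node cuts the centralised route, while the mesh still connects after the loss of any one satellite. The gateway remains the one fixed point on the ground, which is consistent with the article's note that the system relies on a limited number of them.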

For protesters, journalists, and dissidents, this is transformative. For governments, it is destabilising.

A Private Citizen vs the Rules of the Internet

The global internet was built around multistakeholder governance: National regulators, international bodies like the ITU, treaties governing spectrum use, and complex norms around cross-border infrastructure.

Starlink bypasses much of this through sheer technical dominance. It has become a company that owns the rockets, owns the satellites, owns the terminals, controls activation, controls pricing, controls coverage zones, and so effectively controls a layer of global communication.

This is why policymakers now speak openly of “digital sovereignty at risk”. It is no longer only China’s Great Firewall or Iran’s censorship model under scrutiny. It is the idea that global connectivity itself might be increasingly privatised, personalised, and politically unpredictable.

The Unanswered Question

Starlink undeniably delivers real benefits: connectivity in disaster zones, internet access in rural Africa, emergency communications in war, and educational access where infrastructure never existed.

But it also raises an uncomfortable, unresolved question: Should any individual — however visionary, however innovative — hold this much power over who gets access to the global flow of information?

Today, a protester in Tehran can speak to the world because Elon Musk chooses to allow it.

Tomorrow, that access could disappear just as easily with a policy change, a commercial decision, or a geopolitical calculation. The sky has become infrastructure. Infrastructure has become power. And power, increasingly, belongs not to states but to a handful of corporations.

There is another layer to this power calculus — and it is economic. While Starlink has been quietly enabled over countries such as Iran without formal approval, China remains a conspicuous exception. The reason is less technical than commercial. Elon Musk’s wider business empire, particularly Tesla, is deeply entangled with China’s economy. Shanghai hosts Tesla’s largest manufacturing facility in the world, responsible for more than half of the company’s global vehicle output, and Chinese consumers form one of Tesla’s most critical markets.

Chinese authorities, in turn, have made clear their hostility to uncontrolled foreign satellite internet, viewing it as a threat to state censorship and information control. Beijing has banned Starlink terminals, restricted their military use, and invested heavily in its own rival satellite constellation. For Musk, activating Starlink over China would almost certainly provoke regulatory retaliation that could jeopardise Tesla’s operations, supply chains, and market access. The result is an uncomfortable contradiction: the same technology framed as a tool of freedom in Iran or Ukraine is conspicuously absent over China — a reminder that even a supposedly borderless internet still bends to the gravitational pull of corporate interests and geopolitical power.

Society

Reliance to build India’s largest AI-ready data centre, positions Gujarat as global AI hub

As part of making Gujarat India’s artificial intelligence pioneer, in Jamnagar we are building India’s largest AI-ready data centre: Mukesh Ambani

Reliance Industries Limited, India’s largest business group, has announced plans to build the country’s largest artificial intelligence–ready data centre in Jamnagar, a coastal industrial city in the western Indian state of Gujarat, as part of a broader push to expand access to AI technologies at population scale.

The announcement was made by Mukesh Ambani, chairman and managing director of Reliance Industries, during the Vibrant Gujarat Regional Conference for the Kutch and Saurashtra region, a government-led investment and development forum focused on regional economic growth.

Ambani said the Jamnagar facility is being developed with a single objective: “Affordable AI for every Indian.” He positioned the project as a foundational investment in India’s digital infrastructure, aimed at enabling large-scale adoption of artificial intelligence across sectors including industry, services, education and public administration.

“As part of making Gujarat India’s artificial intelligence pioneer, in Jamnagar we are building India’s largest AI-ready data centre,” Ambani said, adding that the facility is intended to support widespread access to AI tools for individuals, enterprises and institutions.

Reliance also announced that its digital arm, Jio, will launch a “people-first intelligence platform,” designed to deliver AI services in multiple languages and across consumer devices. According to Ambani, the platform is being built in India for both domestic and international users, with a focus on everyday productivity and digital inclusion.

The AI initiative forms part of Reliance’s broader commitment to invest approximately Rs 7 trillion (about USD 85 billion) in Gujarat over the next five years. The company said the investments are expected to generate large-scale employment while positioning the region as a hub for emerging technologies.

The Jamnagar AI data centre is being developed alongside what Reliance describes as the world’s largest integrated clean energy manufacturing ecosystem, encompassing solar power, battery storage, green hydrogen and advanced materials. Ambani said the city, historically known as a major hub for oil refining and petrochemicals, is being re-engineered as a centre for next-generation energy and digital technologies.

The announcements were made in the presence of Indian Prime Minister Narendra Modi and Gujarat Chief Minister Bhupendra Patel, underscoring the alignment between public policy and private investment in India’s long-term technology and infrastructure strategy.

Technology

India’s Global Patent Filings Are Rising. And One Company, Not Universities, Dominates the Chart

India’s global patent filings are no longer being driven primarily by universities or public laboratories. New data shows that a single private technology platform now accounts for a dominant share of the country’s international patent applications.

Jio Platforms Limited, owned by India’s top billionaire Mukesh Ambani, has emerged as India’s largest filer of international patents in 2024–25, according to the Annual Report of the Office of the Controller General of Patents, Designs & Trade Marks, published by the Government of India. The scale of the lead is striking — and it highlights a deeper structural imbalance in India’s innovation ecosystem.

Jio Platforms Limited is the digital and technology arm of Reliance Industries Limited, India’s oil-to-telecom conglomerate. It functions as a holding company for Reliance Jio Infocomm and other digital businesses, with a focus on telecom services, broadband connectivity, cloud computing, artificial intelligence, Internet of Things (IoT), and advanced 5G solutions for both consumers and enterprises.

During the year, Jio Platforms filed 1,037 international patent applications, more than four times the filings of the next-ranked private company and over fourteen times those of India’s leading publicly funded research institution.

For comparison:

- TVS Motor Co filed 238 international patents

- CSIR filed 70 international patents

- IIT Madras filed 44 international patents

- Ola Electric Mobility filed 31 international patents

Other Indian universities and firms each filed in the low double or single digits.

Taken together, the combined international patent filings of entities ranked second through tenth still amounted to less than half of Jio Platforms’ total for the year.
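The ratios quoted above can be checked directly from the filing counts. The short Python sketch below uses only the figures given in the article; the entities ranked below these five are not itemised in the text, so the combined total here covers the four named rivals only.

```python
# International patent applications for 2024-25, as quoted in the article.
intl_filings = {
    "Jio Platforms": 1037,
    "TVS Motor Co": 238,
    "CSIR": 70,
    "IIT Madras": 44,
    "Ola Electric Mobility": 31,
}

jio = intl_filings["Jio Platforms"]

# How many times larger Jio's count is than the next private filer and the top public lab.
ratio_vs_next_private = jio / intl_filings["TVS Motor Co"]
ratio_vs_public_lab = jio / intl_filings["CSIR"]

# Combined filings of the four named rivals, and their share of Jio's total.
named_rivals_total = sum(n for name, n in intl_filings.items() if name != "Jio Platforms")
named_rivals_share = named_rivals_total / jio

print(f"Jio vs TVS Motor: {ratio_vs_next_private:.2f}x")
print(f"Jio vs CSIR: {ratio_vs_public_lab:.2f}x")
print(f"Four named rivals combined: {named_rivals_total} ({named_rivals_share:.1%} of Jio)")
```

The four named rivals together filed 383 applications, about 37 percent of Jio's total. That leaves room for the remaining, smaller filers in the top ten while still staying under half.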

Including domestic filings, Jio Platforms filed 1,654 patents in 2024–25. As of March 31, 2025, the company held 485 granted patents, largely concentrated in telecom networks, 5G and emerging 6G technologies, artificial intelligence, and digital infrastructure.

What the data shows — and what it doesn’t

The patent numbers reflect a sustained increase in corporate R&D activity at Jio Platforms, part of the broader Reliance Industries group. Reliance spent ₹4,185 crore on R&D in FY25, up from ₹3,643 crore the previous year. Over three years, its annual R&D expenditure has increased by more than ₹1,500 crore.

However, the patent data also raises uncomfortable questions. India’s publicly funded research ecosystem — including IITs, national laboratories and government-backed innovation programmes — contributes only a small fraction of the country’s internationally filed intellectual property.

For instance, all IITs combined filed fewer international patents than a single large private company, despite decades of public investment in technical education and research infrastructure.

Corporate deep tech versus public research

Reliance Industries’ leadership has framed this push as a transition toward deep technology development. At the company’s 2025 AGM, Mukesh Ambani said, “We are resolutely transforming our operating model to become a Deep-Tech company with advanced manufacturing capabilities.”

Akash Ambani added that Jio’s core technology stack — including its 5G core — has been developed by in-house engineering teams in India.

While these claims align with the patent data, they also underscore a broader shift: cutting-edge applied research in India is becoming increasingly concentrated within a few large corporate groups, rather than distributed across universities, startups and public labs.

How far is India from global R&D leaders?

Even with Jio Platforms’ rising patent output, the R&D spending of its parent, Reliance, remains small compared with global technology leaders:

- Alphabet (Google) spends over USD 40 billion annually on R&D

- Microsoft spends approximately USD 27–30 billion

- Amazon spends more than USD 80 billion on technology and research

In comparison, Reliance’s FY25 R&D spend translates to roughly USD 500 million, a fraction of what global firms invest each quarter.
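The conversion behind that comparison is simple arithmetic, sketched here in Python. The exchange rate of 84 rupees to the dollar is an assumption chosen for illustration, not a figure from the article, and the Alphabet number is the article's "over USD 40 billion" treated as a lower bound.

```python
CRORE = 10_000_000            # one crore is ten million
ASSUMED_INR_PER_USD = 84.0    # assumption: illustrative exchange rate, not from the article

rd_fy25_crore = 4185          # Reliance R&D spend in FY25, per the article
rd_fy24_crore = 3643          # previous year, per the article

def crore_to_usd(amount_crore, inr_per_usd=ASSUMED_INR_PER_USD):
    """Convert an amount in crore rupees to US dollars at the assumed rate."""
    return amount_crore * CRORE / inr_per_usd

rd_fy25_usd = crore_to_usd(rd_fy25_crore)

# Year-on-year change in rupee terms.
yoy_increase_crore = rd_fy25_crore - rd_fy24_crore
yoy_growth_pct = yoy_increase_crore / rd_fy24_crore * 100

# Rough multiple against Alphabet's annual R&D, using the article's lower bound.
alphabet_rd_usd = 40e9
alphabet_multiple = alphabet_rd_usd / rd_fy25_usd

print(f"FY25 R&D: about USD {rd_fy25_usd / 1e6:.0f} million")
print(f"Year-on-year increase: {yoy_increase_crore} crore ({yoy_growth_pct:.1f}%)")
print(f"Alphabet spends at least {alphabet_multiple:.0f}x as much")
```

At the assumed rate, the FY25 figure comes to just under USD 500 million, in line with the article's estimate. A different exchange rate would shift the dollar figure proportionally.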

What distinguishes Jio is not scale, but focus — a relatively high conversion of R&D spending into international patent filings, particularly in telecom infrastructure. Whether these patents translate into long-term technological leadership or commercial dominance remains to be seen.

A lopsided innovation landscape

The government recognition of Jio Platforms as India’s largest global IP creator is significant. But it also exposes a systemic challenge: India’s innovation pipeline is heavily skewed toward a small number of well-capitalised corporations, while public research institutions and startups struggle to compete at the global IP level.

If India’s ambition is to become a science and technology powerhouse, the data suggests that patent creation — especially internationally relevant IP — cannot remain so narrowly concentrated. A resilient innovation ecosystem depends not only on corporate R&D, but also on universities, independent labs and publicly funded science translating research into globally protected knowledge.

For now, Jio Platforms’ patent dominance stands less as a simple success story — and more as a mirror reflecting the structural gaps in India’s broader research and innovation system.
